
CHEMICAL EXAMINATION OF FECES

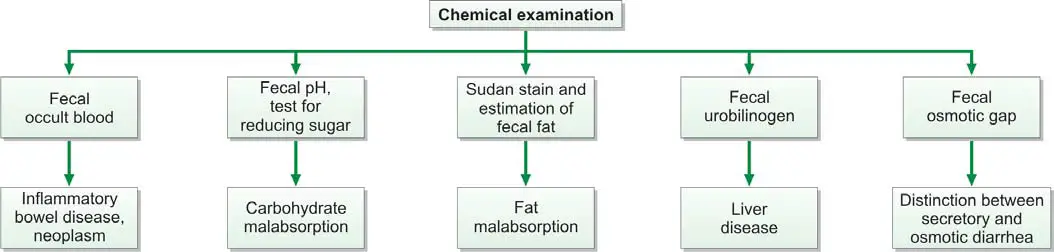

Chemical examination of feces is usually carried out for the following tests (Figure 845.1):

- Occult blood

- Excess fat excretion (malabsorption)

- Urobilinogen

- Reducing sugars

- Fecal osmotic gap

- Fecal pH

Test for Occult Blood in Stools

Blood in feces that is not apparent on gross inspection and can be detected only by chemical tests is called occult blood. Causes of occult blood in stools are:

- Intestinal diseases: hookworms, amebiasis, typhoid fever, ulcerative colitis, intussusception, adenoma, cancer of colon or rectum.

- Gastric and esophageal diseases: peptic ulcer, gastritis, esophageal varices, hiatus hernia.

- Systemic disorders: bleeding diathesis, uremia.

- Long distance runners.

Occult blood test is recommended as a screening procedure for detection of asymptomatic colorectal cancer. Yearly examinations should be carried out after the age of 50 years. If the test is positive, endoscopy and barium enema are indicated.

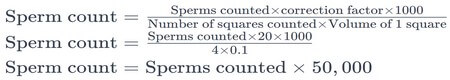

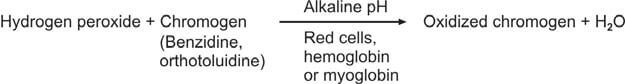

Tests for detection of occult blood in feces: Many tests are available which differ in their specificity and sensitivity. These tests include tests based on peroxidase-like activity of hemoglobin (benzidine, orthotolidine, aminophenazone, guaiac), immunochemical tests, and radioisotope tests.

Tests Based on Peroxidase-like Activity of Hemoglobin

Principle: Hemoglobin has peroxidase-like activity and releases oxygen from hydrogen peroxide. Oxygen molecule then oxidizes the chemical reagent (benzidine, orthotolidine, aminophenazone, or guaiac) to produce a colored reaction product.

Benzidine and orthotolidine are carcinogenic and are no longer used. Benzidine test is also highly sensitive and false-positive reactions are common. Since bleeding from the lesion may be intermittent, repeated testing may be required.

Causes of False-positive Tests

- Ingestion of peroxidase-containing foods like red meat, fish, poultry, turnips, horseradish, cauliflower, spinach, or cucumber. Diet should be free from peroxidase-containing foods for at least 3 days prior to testing.

- Drugs like aspirin and other anti-inflammatory drugs, which increase blood loss from gastrointestinal tract in normal persons.

Causes of False-negative Tests

- Foods containing large amounts of vitamin C.

- Conversion of all hemoglobin to acid hematin (which has no peroxidase-like activity) during passage through the gastrointestinal tract.

Immunochemical Tests

These tests specifically detect human hemoglobin. Therefore there is no interference from animal hemoglobin or myoglobin (e.g. meat) or peroxidase-containing vegetables in the diet.

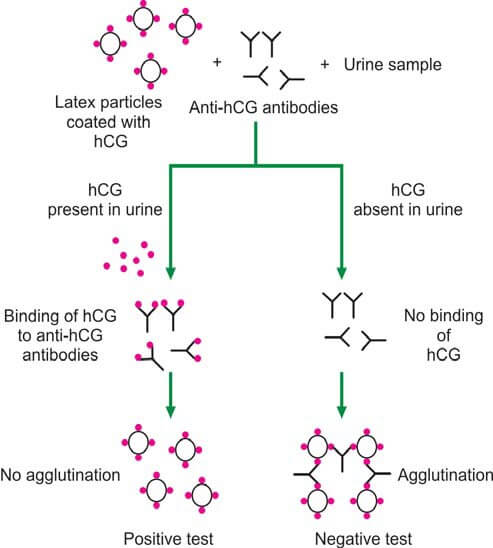

The test consists of mixing the sample with latex particles coated with anti-human hemoglobin antibody; if agglutination occurs, the test is positive. This test can detect 0.6 ml of blood per 100 grams of feces.

Radioisotope Test Using 51Cr

In this test, 10 ml of patient’s blood is withdrawn, labeled with 51Cr, and re-infused intravenously. Radioactivity is measured in fecal sample and in simultaneously collected blood specimen. Radioactivity in feces indicates gastrointestinal bleeding. Amount of blood loss can be calculated. Although the test is sensitive, it is not suitable for routine screening.

Apt test: This test is done to decide whether blood in the vomitus or in the feces of a neonate represents swallowed maternal blood or is the result of bleeding in the gastrointestinal tract. The test was devised by Dr. Apt and hence the name. The baby swallows blood during delivery or during breastfeeding if nipples are cracked. Apt test is based on the principle that if blood is of neonatal origin it will contain high proportion of hemoglobin F (Hb F) that is resistant to alkali denaturation. On the other hand, maternal blood mostly contains adult hemoglobin or Hb A that is less resistant.

Test for Malabsorption of Fat

Dietary fat is absorbed in the small intestine with the help of bile salts and pancreatic lipase. Fecal fat mainly consists of neutral fats (unsplit fats), fatty acids, and soaps (fatty acid salts). Normally very little fat is excreted in feces (<7 grams/day in adults). Excess excretion of fecal fat indicates malabsorption and is known as steatorrhea. It manifests as bulky, frothy, and foul-smelling stools, which float on the surface of water.

Causes of Malabsorption of Fat

- Deficiency of pancreatic lipase (insufficient lipolysis): chronic pancreatitis, cystic fibrosis.

- Deficiency of bile salts (insufficient emulsification of fat): biliary obstruction, severe liver disease, bile salt deconjugation due to bacterial overgrowth in the small intestine.

- Diseases of small intestine: tropical sprue, celiac disease, Whipple’s disease.

Tests for fecal fat are qualitative (i.e. direct microscopic examination after fat staining), and quantitative (i.e. estimation of fat by gravimetric or titrimetric analysis).

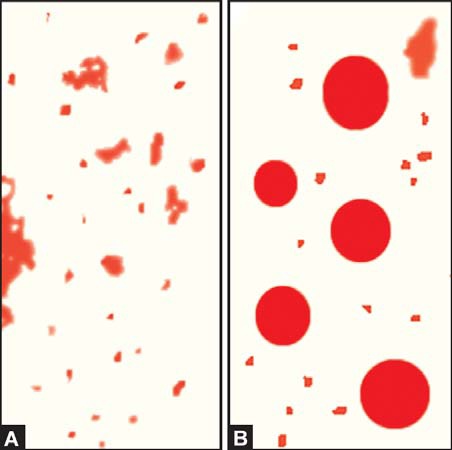

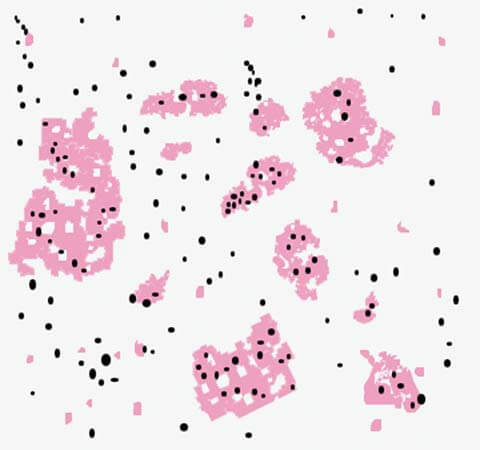

- Microscopic stool examination after staining for fat: A random specimen of stool is collected after putting the patient on a diet of >80 gm fat per day. Stool sample is stained with a fat stain (oil red O, Sudan III, or Sudan IV) and observed under the microscope for fat globules (Figure 845.2). Presence of ≥60 fat droplets/HPF indicates steatorrhea. Ingestion of mineral or castor oil and use of rectal suppositories can cause problems in interpretation.

- Quantitative estimation of fecal fat: The definitive test for diagnosis of fat malabsorption is quantitation of fecal fat. Patient should be on a diet of 70-100 gm of fat per day for 6 days before the test. Feces are collected over 72 hours and stored in a refrigerator during the collection period. Specimen should not be contaminated with urine. Fat quantitation can be done by gravimetric or titrimetric method. In gravimetric method, an accurately weighed sample of feces is emulsified, acidified, and fat is extracted in a solvent; after evaporation of solvent, fat is weighed as a pure compound. Titrimetric analysis is the most widely used method. An accurately weighed stool sample is treated with alcoholic potassium hydroxide to convert fat into soaps. Soaps are then converted to fatty acids by the addition of hydrochloric acid. Fatty acids are extracted in a solvent and the solvent is evaporated. The solution of fat made in neutral alcohol is then titrated against sodium hydroxide. Fatty acids comprise about 80% of fecal fat. Values >7 grams/day are usually abnormal. Values >14 grams/day are specific for diseases causing fat malabsorption.
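The interpretation of a timed fecal fat collection can be sketched in a few lines. This is a minimal illustration only: the function name `interpret_fecal_fat` and the text labels are assumptions made for this example, while the 72-hour collection period and the 7 and 14 grams/day cut-offs are taken from the text above.

```python
def interpret_fecal_fat(total_fat_g, collection_hours=72.0):
    """Convert fat recovered from a timed stool collection to grams/day
    and label it using the cut-offs quoted in the text (hypothetical helper)."""
    if total_fat_g < 0 or collection_hours <= 0:
        raise ValueError("fat must be >= 0 and collection time must be > 0")

    # Normalise the total recovered fat to a 24-hour excretion rate.
    grams_per_day = total_fat_g * 24.0 / collection_hours

    if grams_per_day > 14:
        label = "specific for fat malabsorption"
    elif grams_per_day > 7:
        label = "usually abnormal"
    else:
        label = "within normal limits"
    return grams_per_day, label
```

For example, 30 grams of fat recovered over 72 hours corresponds to 10 grams/day, which falls in the "usually abnormal" band.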

Test for Urobilinogen in Feces

Fecal urobilinogen is determined by Ehrlich’s aldehyde test (see Article “Test for Detection of Urobilinogen in Urine”). Specimen should be fresh and kept protected from light. Normal amount of urobilinogen excreted in feces is 50-300 mg per day. Increased fecal excretion of urobilinogen is seen in hemolytic anemia. Urobilinogen is decreased in biliary tract obstruction, severe liver disease, oral antibiotic therapy (disturbance of intestinal bacterial flora), and aplastic anemia (low hemoglobin turnover). Stools become pale or clay-colored if urobilinogen is reduced or absent.

Test for Reducing Sugars

Deficiency of the intestinal enzyme lactase is a common cause of malabsorption. Lactase converts lactose (in milk) to glucose and galactose. If lactase is deficient, unabsorbed lactose is converted to lactic acid with production of gas. In infants this leads to diarrhea, vomiting, and failure to thrive. Benedict’s test or Clinitest™ tablet test for reducing sugars is used to test a freshly collected stool sample for lactose. In addition, the oral lactose tolerance test is abnormal in lactase deficiency (after oral lactose, blood glucose fails to rise more than 20 mg/dl above the basal value). A rise in blood glucose indicates that lactose has been hydrolysed and absorbed by the mucosa. The lactose tolerance test has now been replaced by lactose breath hydrogen testing. In lactase deficiency, accumulated lactose in the colon is rapidly fermented to organic acids and gases like hydrogen. Hydrogen is absorbed and then excreted through the lungs into the breath. The amount of hydrogen in breath is then measured; breath hydrogen more than 20 ppm above baseline within 4 hours indicates a positive test.
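The breath hydrogen criterion stated above (a rise of more than 20 ppm over baseline within 4 hours) can be written as a short check. The function name, the `(minutes, ppm)` reading format, and the parameter names are assumptions for illustration, not part of any standard protocol.

```python
def breath_hydrogen_positive(baseline_ppm, readings,
                             threshold_ppm=20.0, window_min=240):
    """Return True if any reading within the time window exceeds the
    baseline by more than the threshold (hypothetical helper).

    readings: iterable of (minutes_after_lactose, hydrogen_ppm) pairs.
    """
    return any(
        minutes <= window_min and (ppm - baseline_ppm) > threshold_ppm
        for minutes, ppm in readings
    )
```

With a baseline of 5 ppm, a reading of 31 ppm at 120 minutes is a rise of 26 ppm and would count as positive; a similar rise first seen at 300 minutes falls outside the 4-hour window and would not.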

Fecal Osmotic Gap

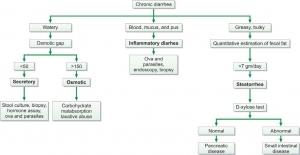

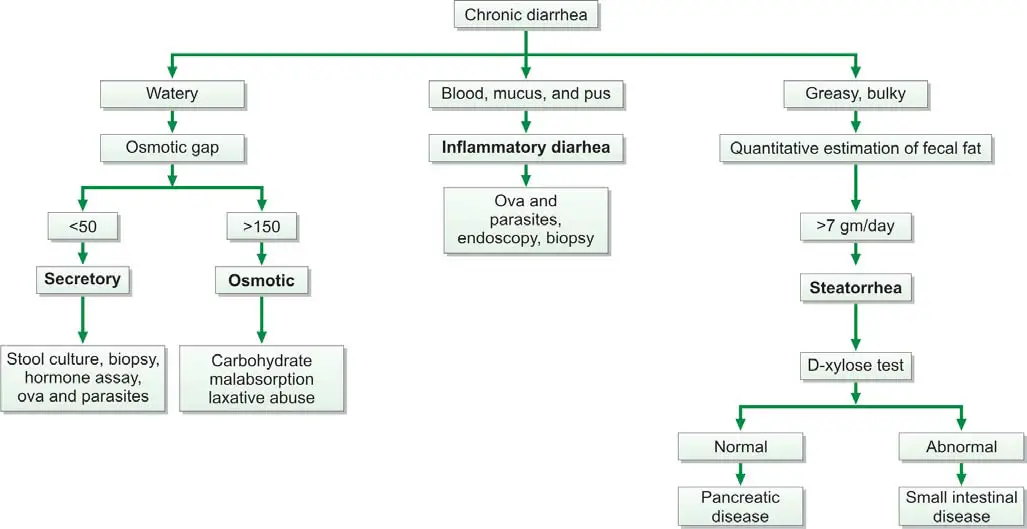

Fecal osmotic gap is calculated from concentration of electrolytes in stool water by formula 290-2([Na+] + [K+]). (290 is the assumed plasma osmolality). In osmotic diarrheas, osmotic gap is >150 mOsm/kg, while in secretory diarrhea, it is typically below 50 mOsm/kg. Evaluation of chronic diarrhea is shown in Figure 845.3.
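As a worked illustration of the formula above, the following sketch computes the gap from stool sodium and potassium and applies the cut-offs quoted in the text. The function names and the "indeterminate" label for values between 50 and 150 mOsm/kg are assumptions for this example; concentrations are assumed to be in mmol/L of stool water.

```python
def fecal_osmotic_gap(stool_na, stool_k, assumed_osmolality=290.0):
    """Fecal osmotic gap = 290 - 2([Na+] + [K+]), in mOsm/kg."""
    return assumed_osmolality - 2.0 * (stool_na + stool_k)


def classify_osmotic_gap(gap):
    """Label the gap using the cut-offs given in the text."""
    if gap > 150:
        return "osmotic diarrhea"
    if gap < 50:
        return "secretory diarrhea"
    # The text gives no interpretation for 50-150; this label is an assumption.
    return "indeterminate"
```

For stool sodium of 30 and potassium of 30, the gap is 290 - 2(60) = 170 mOsm/kg, consistent with osmotic diarrhea; for sodium of 90 and potassium of 40, the gap is 30 mOsm/kg, consistent with secretory diarrhea.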

Fecal pH

Stool pH below 5.6 is characteristic of carbohydrate malabsorption.

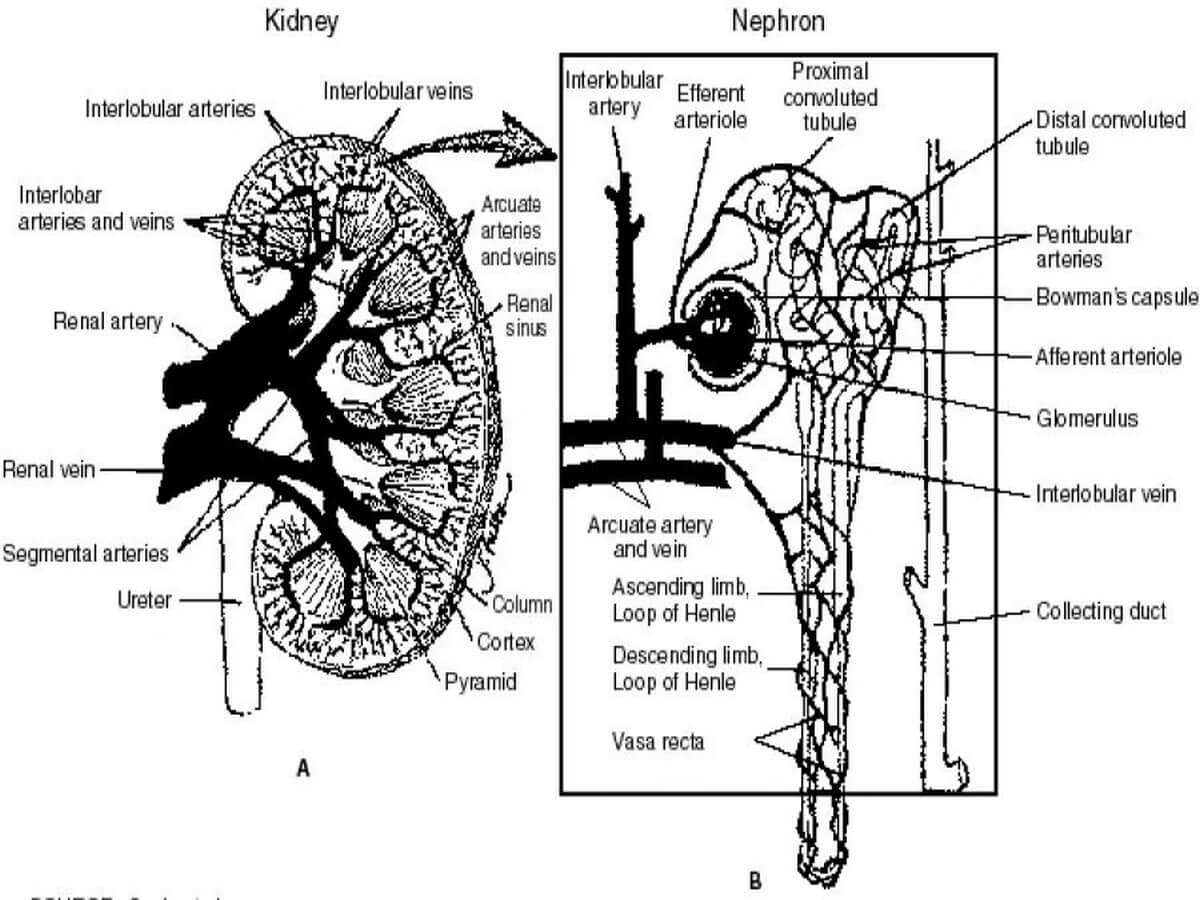

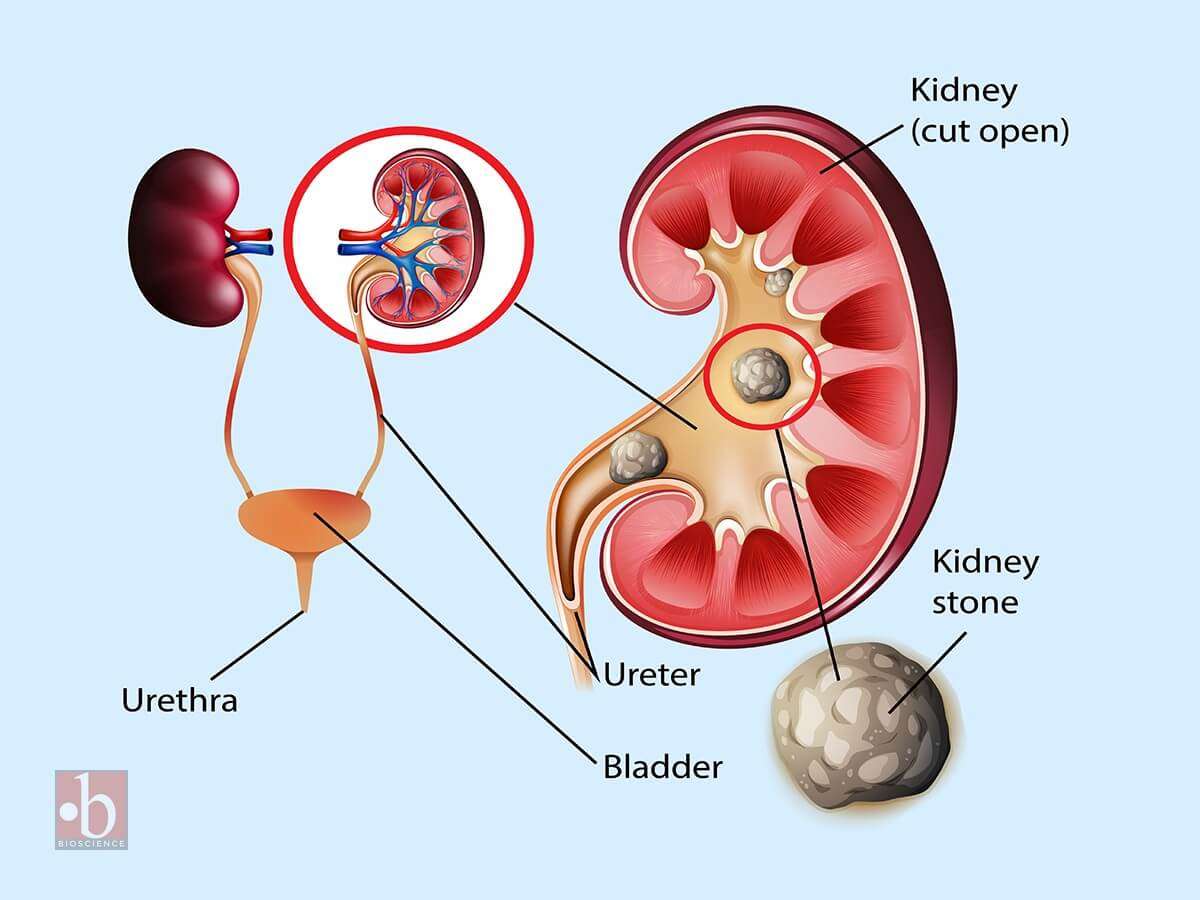

Laboratory Tests to Evaluate Tubular Function

These diagnostic assessments are designed to evaluate the performance of two crucial components of the kidney – the proximal and distal tubules. Proximal tubular function tests, such as Fractional Excretion of Sodium (FENa) and Tubular Reabsorption of Phosphate (TRP), gauge the efficiency of reabsorption in the proximal tubule. On the other hand, tests for distal tubular function, like the Urine Acidification Test, focus on the tubule's ability to maintain the body's acid-base balance. These tests play an important role in diagnosing renal disorders by providing valuable information on the specific functionalities of these intricate renal structures.

Tests to Assess Proximal Tubular Function

The renal tubules play a crucial role in reabsorbing 99% of the glomerular filtrate to retain vital substances such as glucose, amino acids, and water.

Glycosuria

Renal glycosuria is the excretion of glucose in urine despite normal blood glucose levels. It results from an isolated tubular lesion that impairs glucose reabsorption and is a benign condition. Glycosuria may also occur in Fanconi syndrome.

Generalized aminoaciduria

Proximal renal tubular dysfunction leads to the excretion of multiple amino acids in urine due to defective tubular reabsorption.

Tubular proteinuria (Low molecular weight proteinuria)

Under normal conditions, low molecular weight proteins, such as β2-microglobulin, retinol-binding protein, lysozyme, and α1-microglobulin, undergo filtration by glomeruli and complete reabsorption by proximal renal tubules. Tubular damage disrupts this process, causing the excretion of these proteins in urine, detectable by urine protein electrophoresis. Elevated levels of these proteins in urine indicate renal tubular damage.

Urinary concentration of sodium

When both blood urea nitrogen (BUN) and serum creatinine levels are acutely elevated, distinguishing between prerenal azotemia (renal underperfusion) and acute tubular necrosis becomes essential. In prerenal azotemia, renal tubules function normally and reabsorb sodium, whereas in acute tubular necrosis, tubular function is impaired, resulting in decreased sodium reabsorption. Consequently, the urinary sodium concentration is < 20 mEq/L in prerenal azotemia and > 20 mEq/L in acute tubular necrosis.

Fractional excretion of sodium (FENa)

Given that urinary sodium concentration can be influenced by urine volume, calculating the fractional excretion of sodium provides a more accurate assessment. This metric represents the percentage of filtered sodium that is excreted in urine rather than reabsorbed. In cases of acute renal failure, especially in oliguric patients, FENa serves as a reliable means of early differentiation between pre-renal failure and renal failure due to acute tubular necrosis.

The formula for calculating FENa is as follows:

(Urine sodium × Plasma creatinine) ÷ (Plasma sodium × Urine creatinine) × 100%

In pre-renal failure, this ratio is less than 1%, reflecting maximal sodium conservation by tubules stimulated by aldosterone secretion due to reduced renal perfusion. In acute tubular necrosis, the ratio exceeds 1% since tubular cell injury hampers maximum sodium reabsorption. Ratios above 3% strongly suggest acute tubular necrosis.
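The FENa formula and its interpretation can be sketched as follows. The function and parameter names are illustrative assumptions; sodium values must share one unit and creatinine values must share one unit, since the units cancel in the ratio. The "borderline" label for a value of exactly 1% is also an assumption, as the text does not address it.

```python
def fractional_excretion_of_sodium(urine_na, plasma_na, urine_cr, plasma_cr):
    """FENa (%) = (urine Na x plasma Cr) / (plasma Na x urine Cr) x 100."""
    if plasma_na <= 0 or urine_cr <= 0:
        raise ValueError("plasma sodium and urine creatinine must be > 0")
    return (urine_na * plasma_cr) / (plasma_na * urine_cr) * 100.0


def interpret_fena(fena_percent):
    """Apply the cut-offs quoted in the text (hypothetical helper)."""
    if fena_percent < 1.0:
        return "pre-renal failure"
    if fena_percent > 3.0:
        return "strongly suggests acute tubular necrosis"
    if fena_percent > 1.0:
        return "acute tubular necrosis"
    return "borderline"  # exactly 1%: not covered by the text
```

For example, urine sodium 10 mEq/L, plasma sodium 140 mEq/L, urine creatinine 100 mg/dl, and plasma creatinine 2 mg/dl give a FENa of about 0.14%, which falls in the pre-renal range.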

Tests to Assess Distal Tubular Function

Urine specific gravity

The normal range for urine specific gravity is 1.003 to 1.030, contingent upon the individual's state of hydration and fluid intake.

- Causes of Increased Specific Gravity:

- Reduced renal perfusion (with preservation of tubular concentrating ability),

- Proteinuria,

- Glycosuria,

- Glomerulonephritis,

- Urinary tract obstruction.

- Causes of Reduced Specific Gravity:

- Diabetes insipidus,

- Chronic renal failure,

- Impaired concentrating ability due to tubular diseases.

As a test for renal function, urine specific gravity provides insights into the renal tubules' ability to concentrate the glomerular filtrate. This concentrating capability is compromised in diseases affecting the renal tubules.

A fixed specific gravity of 1.010, impervious to alteration with changes in fluid intake, serves as an indicator of chronic renal failure.

Urine osmolality

The measurement of urine/plasma osmolality stands as the most commonly employed test to assess tubular function. This method, highly sensitive to concentration ability, quantifies the number of dissolved particles in a solution. In contrast, specific gravity, measuring the total mass of solute in relation to water mass, is influenced by the number and nature of dissolved particles, making osmolality a preferred measurement. Osmolality is expressed as milliOsmol/kg of water.

When solutes are dissolved in a solvent, alterations occur in properties such as freezing point, boiling point, vapor pressure, or osmotic pressure. Osmolality measurement, conducted with an instrument known as an osmometer, captures these changes.

The urine/plasma osmolality ratio aids in distinguishing pre-renal azotemia (higher ratio) from acute renal failure due to acute tubular necrosis (lower ratio). Similar urine and plasma osmolality values indicate defective tubular reabsorption of water.

Water deprivation test

When baseline urine osmolality is inconclusive, the water deprivation test is performed. This test involves restricting water intake for a specified period, followed by the measurement of specific gravity or osmolality. In normal cases, urine osmolality should rise in response to water deprivation. Failure to increase prompts administration of desmopressin to differentiate between central and nephrogenic diabetes insipidus. A urine osmolality > 800 mOsm/kg or specific gravity ≥1.025 after dehydration indicates normal renal tubular concentration ability, although normal results do not exclude the presence of renal disease.

Results may be skewed if the patient is on a low-salt, low-protein diet or experiencing major electrolyte and water disturbances.

Water loading antidiuretic hormone suppression test

This test gauges the kidney's ability to dilute urine after water loading. After an overnight fast, the patient drinks 20 ml/kg of water in 15-30 minutes. Urine is collected hourly for 4 hours to measure volume, specific gravity, and osmolality. Plasma antidiuretic hormone levels and serum osmolality are measured at hourly intervals.

Normal results entail excreting over 90% of the water load in 4 hours, with specific gravity falling to 1.003 and osmolality to < 100 mOsm/kg. The response is impaired in renal dysfunction, adrenocortical insufficiency, malabsorption, obesity, ascites, congestive heart failure, cirrhosis, and dehydration. The test is contraindicated in patients with cardiac failure or kidney disease because of the risk of fatal hyponatremia if the water load is not excreted.
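The arithmetic of the water loading test can be illustrated with a short sketch. The function names are assumptions for this example, and the check covers only the volume and osmolality criteria stated in the text (more than 90% of a 20 ml/kg load excreted in 4 hours, and osmolality falling below 100 mOsm/kg).

```python
def water_load_ml(weight_kg, ml_per_kg=20.0):
    """Volume of water to be drunk, at 20 ml/kg body weight."""
    if weight_kg <= 0:
        raise ValueError("weight must be > 0")
    return weight_kg * ml_per_kg


def water_loading_normal(weight_kg, hourly_urine_ml, lowest_osmolality):
    """Return True if over 90% of the load is excreted across the hourly
    collections and the lowest urine osmolality is below 100 mOsm/kg."""
    load = water_load_ml(weight_kg)
    excreted_fraction = sum(hourly_urine_ml) / load
    return excreted_fraction > 0.90 and lowest_osmolality < 100
```

A 70 kg patient would drink 1400 ml; hourly urine volumes of 400, 500, 300, and 150 ml total 1350 ml, or about 96% of the load.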

Ammonium chloride loading test (Acid load test)

Used to diagnose distal (type 1) renal tubular acidosis, this test is performed after other causes of metabolic acidosis have been excluded. After overnight fasting, urine pH and plasma bicarbonate are measured. A pH less than 5.4 with low plasma bicarbonate confirms normal acidifying ability of renal tubules. If neither of these results is obtained, further testing is warranted. The patient receives oral ammonium chloride (0.1 gm/kg) after an overnight fast, and urine samples are collected hourly for 6-8 hours. Ammonium ion dissociation produces H+ and NH3, making blood acidic. A pH less than 5.4 in any sample confirms normal acidifying ability of distal tubules.
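The dose calculation and the reading rule for the acid load test can be sketched as below. Both function names are assumptions for illustration; the 0.1 gm/kg dose and the pH cut-off of 5.4 are taken from the text.

```python
def ammonium_chloride_dose_g(weight_kg, dose_g_per_kg=0.1):
    """Oral ammonium chloride dose in grams, at 0.1 gm/kg body weight."""
    if weight_kg <= 0:
        raise ValueError("weight must be > 0")
    return weight_kg * dose_g_per_kg


def acidification_normal(hourly_urine_ph, cutoff=5.4):
    """Return True if any hourly sample has a pH below the cut-off."""
    return any(ph < cutoff for ph in hourly_urine_ph)
```

For a 60 kg patient the dose works out to 6 grams; a series of urine pH values such as 6.2, 5.9, 5.3, and 5.6 would meet the criterion because one sample falls below 5.4.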

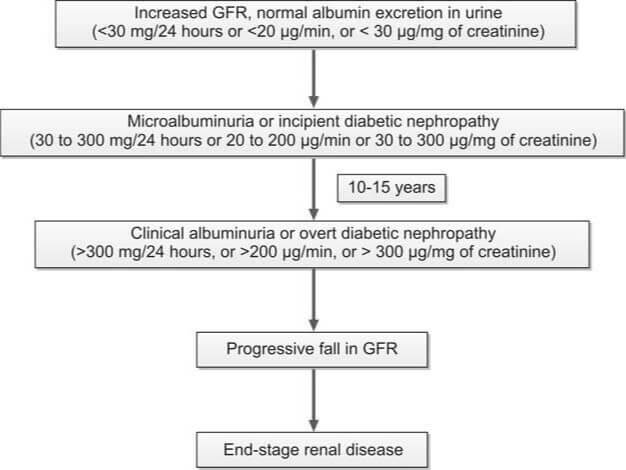

Microalbuminuria and Albuminuria

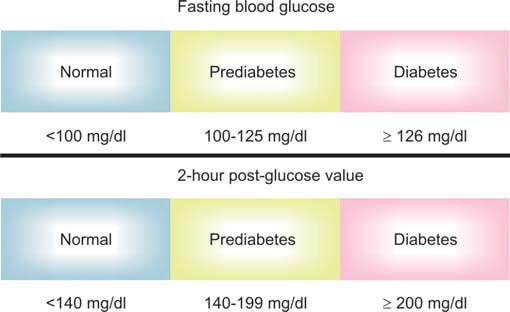

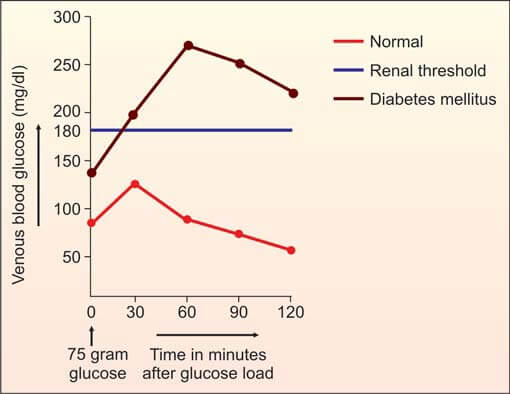

Normally, a very small amount of albumin is excreted in urine. The earliest evidence of glomerular damage in diabetes mellitus is the occurrence of microalbuminuria (albuminuria in the range of 30 to 300 mg/24 hours). Albuminuria > 300 mg/24 hours is termed clinical or overt albuminuria and indicates significant glomerular damage.
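The ranges above translate directly into a simple classification. This is a minimal sketch: the function name and labels are assumptions, and treating values below 30 mg/24 hours as the normal range is inferred from the stated lower bound of microalbuminuria.

```python
def classify_albuminuria(albumin_mg_per_24h):
    """Classify 24-hour urinary albumin excretion using the text's ranges."""
    if albumin_mg_per_24h < 0:
        raise ValueError("albumin excretion cannot be negative")
    if albumin_mg_per_24h < 30:
        return "normal"  # assumption: below the microalbuminuria range
    if albumin_mg_per_24h <= 300:
        return "microalbuminuria"
    return "overt albuminuria"
```

For example, an excretion of 120 mg/24 hours falls in the microalbuminuria range, while 450 mg/24 hours is overt albuminuria.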

Microalbuminuria is a term used to describe the presence of small amounts of albumin in the urine. Albumin is a protein that is normally found in the blood, but when it appears in the urine, it can be an early sign of kidney damage. This condition is often associated with diabetes, as high blood sugar levels can damage the blood vessels in the kidneys, leading to the leakage of albumin into the urine. Additionally, microalbuminuria can also be an indicator of other underlying health issues, such as high blood pressure or cardiovascular disease.

On the other hand, albuminuria refers to the presence of larger amounts of albumin in the urine. It is often considered a more severe form of kidney damage compared to microalbuminuria. Albuminuria can be caused by a variety of factors, including diabetes, hypertension, glomerulonephritis, and certain medications. It is crucial to diagnose and monitor albuminuria as it can be a sign of progressive kidney disease and an increased risk of cardiovascular events.

Distinguishing between microalbuminuria and albuminuria is important as they have different diagnostic and clinical implications. Microalbuminuria is often considered an early warning sign of kidney damage, while albuminuria indicates more advanced kidney dysfunction. Identifying these conditions early on allows healthcare professionals to intervene and implement appropriate treatment strategies to prevent further kidney damage and manage associated health conditions.

It is also essential to differentiate albuminuria from proteinuria, another term used to describe the presence of excess protein in the urine. While albumin is a specific type of protein, proteinuria refers to the presence of any type of protein in the urine. Albuminuria is a subset of proteinuria, specifically referring to the presence of albumin. Understanding this distinction is crucial as albuminuria has specific diagnostic and prognostic implications, especially in the context of kidney disease and cardiovascular health.

To measure albuminuria levels, various techniques are available, including urine dipstick tests, spot urine albumin-to-creatinine ratio, and 24-hour urine collection. These methods allow healthcare professionals to quantify the amount of albumin in the urine and determine if it falls within the normal range or if further investigation is required. Abnormal levels of albuminuria can indicate kidney damage and the need for further evaluation and management.

Unraveling Microalbuminuria

Defining Microalbuminuria

Microalbuminuria refers to the presence of small amounts of albumin in the urine. Albumin is a protein that is normally found in the blood, but when it appears in the urine, it can be an early sign of kidney damage or dysfunction. The term "micro" in microalbuminuria signifies that the levels of albumin in the urine are relatively low, but still higher than what is considered normal.

The clinical importance of microalbuminuria lies in its association with various health conditions, particularly diabetes. In fact, microalbuminuria is often considered an early marker of kidney damage in individuals with diabetes. It serves as an indicator of the onset of diabetic nephropathy, a condition characterized by progressive kidney damage due to diabetes.

In addition to diabetes, microalbuminuria can also be seen in other conditions such as hypertension, cardiovascular disease, and certain kidney disorders. It is important to note that microalbuminuria may not always be accompanied by noticeable symptoms, making regular screening and monitoring crucial for early detection and intervention.

By identifying microalbuminuria early on, healthcare professionals can implement appropriate measures to prevent or slow down the progression of kidney damage. This may involve lifestyle modifications, such as maintaining optimal blood sugar and blood pressure levels, as well as medication management.

Furthermore, microalbuminuria can also serve as a prognostic indicator for cardiovascular disease. Studies have shown that individuals with microalbuminuria are at an increased risk of developing heart disease and experiencing cardiovascular events, such as heart attacks and strokes. Therefore, identifying and managing microalbuminuria can have broader implications for overall cardiovascular health.

What is Microalbuminuria?

Microalbuminuria is a term that is frequently used in the medical field, particularly in relation to kidney health. In this section, we will delve deeper into the precise definition of microalbuminuria and explore its clinical importance. By understanding what microalbuminuria is, we can better comprehend its implications and significance in various health conditions.

It is worth noting that microalbuminuria is different from proteinuria, which refers to the presence of larger amounts of protein in the urine. While both conditions indicate kidney damage, microalbuminuria specifically refers to the presence of albumin, whereas proteinuria encompasses a broader range of proteins.

Clinical Importance

Microalbuminuria is not just a random occurrence; it holds significant clinical importance in the field of medicine. By understanding the medical implications and relevance of microalbuminuria, healthcare professionals can better assess and manage various health conditions. In this section, we will delve deeper into the clinical importance of microalbuminuria and its implications for patient care.

One of the primary clinical implications of microalbuminuria is its association with kidney damage. As mentioned earlier, the presence of albumin in the urine can be an early sign of kidney dysfunction. In individuals with diabetes, microalbuminuria serves as an early marker of diabetic nephropathy, a condition characterized by progressive kidney damage due to diabetes. By detecting microalbuminuria, healthcare professionals can intervene early and implement measures to slow down the progression of kidney damage.

Moreover, microalbuminuria is not limited to diabetes alone. It can also be seen in individuals with hypertension, cardiovascular disease, and certain kidney disorders. Regular screening for microalbuminuria in these populations is crucial for early detection and intervention. By identifying microalbuminuria in individuals with these conditions, healthcare professionals can implement appropriate measures to prevent or manage kidney damage, ultimately improving patient outcomes.

In addition to its role in assessing kidney health, microalbuminuria also has broader implications for cardiovascular disease. Studies have shown that individuals with microalbuminuria are at an increased risk of developing heart disease and experiencing cardiovascular events, such as heart attacks and strokes. Therefore, identifying and managing microalbuminuria can have significant implications for overall cardiovascular health. By monitoring microalbuminuria levels and implementing appropriate interventions, healthcare professionals can help reduce the risk of cardiovascular complications in at-risk individuals.

Furthermore, microalbuminuria can serve as a prognostic indicator for overall health and well-being. Its presence can indicate underlying systemic inflammation and endothelial dysfunction, both of which are associated with various health conditions. By identifying microalbuminuria, healthcare professionals can further investigate the underlying causes and implement targeted interventions to address these systemic issues. This comprehensive approach to patient care can lead to improved overall health outcomes and a better quality of life for individuals with microalbuminuria.

Microalbuminuria in Diabetes

The Link with Diabetes

Investigating the connection between microalbuminuria and diabetes, it becomes evident that these two conditions are closely intertwined. Diabetes, a chronic metabolic disorder characterized by high blood sugar levels, can have significant implications on kidney health. In fact, microalbuminuria is often considered an early sign of diabetic kidney disease, also known as diabetic nephropathy.

Diabetic nephropathy is a progressive kidney disease that occurs as a result of long-standing diabetes. It is estimated that approximately 30-40% of individuals with diabetes will develop diabetic nephropathy, making it one of the leading causes of end-stage renal disease worldwide. Microalbuminuria serves as a crucial marker in identifying the onset and progression of this condition.

When diabetes is poorly controlled, high levels of glucose in the blood can damage the delicate blood vessels in the kidneys. These blood vessels, known as glomeruli, play a vital role in filtering waste products and excess fluid from the blood. The damage to the glomeruli leads to increased permeability, allowing small amounts of albumin, a protein normally found in the blood, to leak into the urine. This leakage of albumin is what characterizes microalbuminuria.

The presence of microalbuminuria in individuals with diabetes is a red flag, indicating that the kidneys are not functioning optimally. It serves as an early warning sign of potential kidney damage and the progression to more severe forms of kidney disease. Therefore, regular screening for microalbuminuria is recommended for individuals with diabetes to detect kidney dysfunction at an early stage.

Moreover, microalbuminuria is not only a marker of kidney damage but also a predictor of cardiovascular disease in individuals with diabetes. Studies have shown that the presence of microalbuminuria is associated with an increased risk of developing heart disease, stroke, and other cardiovascular complications. This highlights the importance of identifying and managing microalbuminuria in diabetic individuals to prevent the onset of these life-threatening conditions.

The link between microalbuminuria and diabetes is multifactorial. Apart from high blood glucose levels, other factors such as high blood pressure, smoking, and genetic predisposition can further contribute to the development and progression of microalbuminuria in individuals with diabetes. Therefore, it is crucial for healthcare professionals to address these risk factors comprehensively and provide appropriate management strategies to prevent or delay the progression of kidney disease.

Microalbuminuria serves as a crucial link between diabetes and kidney health. It acts as an early indicator of diabetic nephropathy and is associated with an increased risk of cardiovascular disease. Regular screening for microalbuminuria in individuals with diabetes is essential to detect kidney dysfunction at an early stage and implement appropriate interventions to prevent further complications. By understanding the link between microalbuminuria and diabetes, healthcare professionals can take proactive measures to protect the kidney and cardiovascular health of diabetic individuals.

Causes and Mechanisms

Understanding the underlying causes and mechanisms leading to microalbuminuria in diabetic individuals is crucial for effective management and prevention of kidney disease. Several factors contribute to the development of microalbuminuria in diabetes, including:

- Glomerular Damage: The primary cause of microalbuminuria in diabetes is damage to the glomeruli, the tiny blood vessels in the kidneys responsible for filtering waste products. High blood glucose levels, along with other factors such as high blood pressure and inflammation, can lead to the thickening and narrowing of the glomerular walls. This damages the filtration system, allowing albumin to leak into the urine.

- Increased Permeability: In diabetes, the glomerular filtration barrier becomes more permeable, allowing larger molecules like albumin to pass through. This increased permeability is due to the disruption of the podocytes, specialized cells that line the glomerular walls and help maintain the filtration barrier. The loss of podocyte function leads to the leakage of albumin into the urine.

- Oxidative Stress: Diabetes is associated with increased oxidative stress, which occurs when there is an imbalance between the production of harmful free radicals and the body's ability to neutralize them. Oxidative stress can damage the delicate structures of the kidneys, including the glomeruli, leading to microalbuminuria.

- Inflammation: Chronic inflammation plays a significant role in the development and progression of microalbuminuria in diabetes. Inflammatory processes can cause damage to the glomeruli and impair their function, resulting in the leakage of albumin into the urine.

- Endothelial Dysfunction: Diabetes affects the endothelial cells lining the blood vessels, including those in the glomeruli. Endothelial dysfunction leads to impaired regulation of blood flow and increased permeability of the glomerular filtration barrier, contributing to microalbuminuria.

- Renin-Angiotensin System (RAS) Activation: In diabetes, the renin-angiotensin system, which regulates blood pressure and fluid balance, becomes overactive. This activation leads to constriction of the blood vessels in the kidneys and increased production of angiotensin II, a hormone that promotes inflammation and fibrosis. These changes further contribute to glomerular damage and microalbuminuria.

- Genetic Predisposition: Some individuals may have a genetic predisposition to developing microalbuminuria in diabetes. Certain gene variants can affect the structure and function of the glomeruli, making them more susceptible to damage and albumin leakage.

Understanding these underlying causes and mechanisms is essential for targeted interventions to prevent or delay the progression of microalbuminuria in diabetic individuals. By addressing factors such as blood glucose control, blood pressure management, and inflammation reduction, healthcare professionals can help minimize glomerular damage and preserve kidney function.

In addition to lifestyle modifications, medications that target the renin-angiotensin system, such as angiotensin-converting enzyme inhibitors (ACE inhibitors) and angiotensin receptor blockers (ARBs), are commonly prescribed to individuals with microalbuminuria. These medications help reduce blood pressure, protect the glomeruli, and slow the progression of kidney disease.

Microalbuminuria in diabetes is caused by a combination of glomerular damage, increased permeability, oxidative stress, inflammation, endothelial dysfunction, RAS activation, and genetic predisposition. Understanding these causes and mechanisms is crucial for implementing effective strategies to prevent and manage microalbuminuria in diabetic individuals. By addressing these underlying factors, healthcare professionals can help preserve kidney function and reduce the risk of complications associated with microalbuminuria and diabetic kidney disease.

Deciphering Albuminuria

Defining Albuminuria

Albuminuria is a term that is often used in the medical field, particularly in relation to kidney health. In this section, we will delve deeper into the definition of albuminuria and explore its implications. By understanding what albuminuria is, we can gain valuable insights into its clinical significance and diagnostic value.

Albuminuria refers to the presence of albumin in the urine. Albumin is a protein that is normally found in the blood, but when it appears in the urine, it can be an indication of kidney damage or dysfunction. The kidneys play a crucial role in filtering waste products from the blood and maintaining the balance of fluids and electrolytes in the body. When the kidneys are functioning properly, they prevent the passage of albumin into the urine. However, when there is damage to the kidneys, the filtration process is compromised, leading to the leakage of albumin into the urine.

The presence of albumin in the urine can be an early sign of kidney disease or other underlying health conditions. It is important to note that albuminuria is not a disease itself, but rather a marker of kidney damage. By detecting albuminuria, healthcare professionals can identify individuals who may be at risk of developing kidney disease or who may already have kidney damage.

Distinguishing albuminuria from microalbuminuria is essential. While both terms refer to the presence of albumin in the urine, microalbuminuria specifically refers to a lower level of albumin in the urine. Microalbuminuria is often used as an early marker for kidney damage, particularly in individuals with diabetes. On the other hand, albuminuria generally indicates more significant kidney damage or dysfunction.

Understanding the medical terminology associated with albuminuria is crucial for accurate diagnosis and communication between healthcare professionals. By using standardized terminology, healthcare providers can ensure consistency in their assessments and interpretations of albuminuria. This allows for better collaboration and understanding among medical professionals, ultimately leading to improved patient care.

The diagnostic significance of albuminuria cannot be overstated. It serves as an important tool for identifying individuals at risk of kidney disease and monitoring the progression of existing kidney conditions. By measuring albuminuria levels, healthcare professionals can assess the severity of kidney damage and determine appropriate treatment plans. Additionally, albuminuria can also be an indicator of other health conditions, such as cardiovascular disease, hypertension, and diabetes.

Albuminuria is the presence of albumin in the urine and serves as a marker of kidney damage or dysfunction. It is important to differentiate between albuminuria and microalbuminuria, as they indicate different levels of albumin in the urine. Understanding the medical terminology associated with albuminuria is crucial for accurate diagnosis and effective communication among healthcare professionals. The diagnostic significance of albuminuria cannot be overlooked, as it provides valuable insights into kidney health and the presence of other underlying health conditions.

What is Albuminuria?

Albuminuria is a term that is frequently used in the medical field, particularly in relation to kidney health. It refers to the presence of albumin in the urine, which can be an indication of kidney damage or dysfunction. Albumin, a protein normally found in the blood, should not be present in the urine under normal circumstances. When albumin appears in the urine, it suggests that the kidneys are not functioning properly and are allowing the leakage of this protein.

Understanding what albuminuria is can provide valuable insights into its clinical significance and diagnostic value. While albuminuria itself is not a disease, it serves as a marker of kidney damage or dysfunction. By detecting albuminuria, healthcare professionals can identify individuals who may be at risk of developing kidney disease or who may already have kidney damage.

Distinguishing albuminuria from microalbuminuria is essential. Both terms refer to the presence of albumin in the urine, but microalbuminuria specifically refers to a lower level of albumin in the urine. Microalbuminuria is often used as an early marker for kidney damage, particularly in individuals with diabetes. On the other hand, albuminuria generally indicates more significant kidney damage or dysfunction.

The medical terminology associated with albuminuria is crucial for accurate diagnosis and effective communication among healthcare professionals. By using standardized terminology, healthcare providers can ensure consistency in their assessments and interpretations of albuminuria. This allows for better collaboration and understanding among medical professionals, ultimately leading to improved patient care.

Albuminuria is the presence of albumin in the urine and serves as a marker of kidney damage or dysfunction. Distinguishing albuminuria from microalbuminuria is crucial, as they indicate different levels of albumin in the urine. Understanding the medical terminology associated with albuminuria is essential for accurate diagnosis and effective communication among healthcare professionals. The diagnostic significance of albuminuria cannot be overlooked, as it provides valuable insights into kidney health and the presence of other underlying health conditions. By detecting albuminuria, healthcare professionals can identify individuals at risk and take appropriate measures to prevent further kidney damage or manage existing conditions.

Distinguishing Albuminuria from Microalbuminuria

When it comes to understanding kidney health, it is important to differentiate between albuminuria and microalbuminuria. While both terms refer to the presence of albumin in the urine, there are key differences that set them apart. Let's explore these differences and understand why they are significant.

Albuminuria, as we discussed earlier, is the presence of albumin in the urine. It is an indication of kidney damage or dysfunction and suggests that the kidneys are not functioning properly. On the other hand, microalbuminuria specifically refers to a lower level of albumin in the urine. It is often used as an early marker for kidney damage, particularly in individuals with diabetes.

One of the main differences between albuminuria and microalbuminuria is the level of albumin present in the urine. Microalbuminuria is characterized by a relatively low level of albumin, usually between 30 and 300 mg per day. This lower level of albumin can be detected through specialized tests that are more sensitive to small amounts of albumin in the urine.

Albuminuria, on the other hand, generally indicates more significant kidney damage or dysfunction. The level of albumin in the urine is usually higher, exceeding 300 mg per day. This higher level of albumin suggests that the kidneys are experiencing more severe impairment and are unable to properly filter out the protein.

Another important distinction between albuminuria and microalbuminuria is their clinical significance. Microalbuminuria is often used as an early marker for kidney damage, particularly in individuals with diabetes. It can serve as a warning sign that the kidneys are not functioning optimally and that further damage may occur if appropriate measures are not taken.

Albuminuria, on the other hand, generally indicates more advanced kidney damage or dysfunction. It is associated with a higher risk of developing kidney disease and other complications. Detecting albuminuria is crucial for healthcare professionals to identify individuals who may require more intensive monitoring and treatment to prevent further kidney damage.

In terms of diagnostic value, both albuminuria and microalbuminuria play important roles. By measuring albuminuria levels, healthcare professionals can assess the severity of kidney damage and determine appropriate treatment plans. Microalbuminuria, in particular, can help identify individuals who may benefit from early interventions to prevent the progression of kidney disease.

It is worth noting that albuminuria can also be an indicator of other health conditions, such as cardiovascular disease, hypertension, and diabetes. Therefore, detecting albuminuria can provide valuable insights into a patient's overall health and help healthcare professionals identify and manage these underlying conditions.

Distinguishing between albuminuria and microalbuminuria is crucial for understanding kidney health and identifying individuals at risk of kidney disease. While both terms refer to the presence of albumin in the urine, microalbuminuria specifically indicates a lower level of albumin and serves as an early marker for kidney damage. Albuminuria, on the other hand, generally indicates more significant kidney damage or dysfunction. By detecting and monitoring albuminuria levels, healthcare professionals can assess the severity of kidney damage, identify underlying health conditions, and determine appropriate treatment plans.

Clinical Albuminuria

Medical Terminology

To examine albuminuria as a medical term and its application in clinical settings, it is important to understand the significance of this condition in diagnosing and managing various health conditions. Albuminuria refers to the presence of excessive amounts of albumin, a protein, in the urine. This condition is often an indicator of kidney damage or dysfunction.

Albuminuria is a term commonly used by healthcare professionals to describe the presence of albumin in the urine. It is an important diagnostic marker for kidney diseases, particularly those affecting the glomeruli, the tiny blood vessels in the kidneys responsible for filtering waste products from the blood. When the glomeruli are damaged, they may allow albumin to leak into the urine, leading to albuminuria.

The presence of albuminuria can be an early sign of kidney damage, even before other symptoms become apparent. It is often associated with conditions such as diabetes, hypertension, and chronic kidney disease. Monitoring albuminuria levels can help healthcare providers assess the progression of these conditions and make informed decisions regarding treatment and management.

In clinical settings, albuminuria is measured using various methods, including urine dipstick tests and laboratory analysis. These tests detect the presence of albumin in the urine and provide an indication of the severity of albuminuria. Normal levels of albumin in the urine are typically less than 30 milligrams per gram of creatinine (mg/g). Higher levels may indicate kidney damage or dysfunction.

The diagnostic significance of albuminuria lies in its ability to identify individuals at risk of developing kidney disease or those who already have kidney damage. It serves as a valuable tool for healthcare providers to assess kidney function and determine the appropriate course of action. By monitoring albuminuria levels over time, healthcare professionals can track the progression of kidney disease and make necessary adjustments to treatment plans.

Furthermore, albuminuria can also be used to assess the effectiveness of interventions aimed at reducing kidney damage. For example, in individuals with diabetes, tight control of blood glucose levels and blood pressure can help prevent or delay the onset of kidney disease. Regular monitoring of albuminuria levels can provide valuable feedback on the success of these interventions and guide further treatment decisions.

Diagnostic Significance

Albuminuria plays a crucial role in medical assessments as it holds significant diagnostic value. By detecting the presence of excessive amounts of albumin in the urine, healthcare professionals can gain valuable insights into the underlying health conditions and make informed decisions regarding treatment and management. This section will delve into the diagnostic significance of albuminuria and its implications in clinical practice.

One of the primary uses of albuminuria as a diagnostic marker is in identifying individuals at risk of developing kidney disease or those who already have kidney damage. As mentioned earlier, albuminuria is often associated with conditions such as diabetes, hypertension, and chronic kidney disease. Monitoring albuminuria levels can help healthcare providers assess the progression of these conditions and determine the appropriate course of action. By identifying albuminuria early on, interventions can be implemented to prevent or delay the onset of kidney disease, leading to improved patient outcomes.

In addition to kidney disease, albuminuria can also serve as an indicator of other systemic conditions. For example, it has been found that albuminuria is associated with cardiovascular disease. Studies have shown that individuals with albuminuria are at a higher risk of developing heart disease, stroke, and other cardiovascular events. Therefore, by monitoring albuminuria levels, healthcare professionals can identify individuals who may benefit from further cardiovascular assessments and interventions.

Furthermore, albuminuria can provide valuable information about the effectiveness of interventions aimed at reducing kidney damage and improving overall health. For instance, in individuals with diabetes, tight control of blood glucose levels and blood pressure can help prevent or delay the onset of kidney disease. Regular monitoring of albuminuria levels can serve as a feedback mechanism to assess the success of these interventions. If albuminuria levels decrease over time, it indicates that the interventions are effective in preserving kidney function and reducing the risk of complications.

Albuminuria also helps to differentiate between types of kidney disease. Albuminuria is primarily associated with glomerular damage, whereas proteinuria made up largely of proteins other than albumin can be indicative of tubular damage. By distinguishing between albuminuria and other forms of proteinuria, healthcare professionals can narrow down the potential causes of kidney dysfunction and tailor treatment plans accordingly.

Albuminuria has considerable diagnostic significance in medical assessments. By monitoring albuminuria levels, healthcare professionals can identify individuals at risk of developing kidney disease, assess the progression of existing conditions, and make informed decisions regarding treatment and management. Albuminuria can also serve as an indicator of other systemic conditions, such as cardiovascular disease, and can provide valuable information about the effectiveness of interventions aimed at reducing kidney damage. By understanding the diagnostic significance of albuminuria, healthcare professionals can use this information to improve patient outcomes and tailor treatment plans.

Comparative Analysis

Albuminuria vs Proteinuria

Differentiating Albuminuria and Proteinuria

Albuminuria and proteinuria are two terms often used interchangeably, but they actually refer to different conditions. In this section, we will delve into the distinctions between albuminuria and proteinuria, shedding light on their differences and clinical implications.

Albuminuria, as we discussed earlier, is the presence of albumin in the urine. It is a specific type of proteinuria, where the protein being excreted is primarily albumin. Proteinuria, on the other hand, refers to the presence of any type of protein in the urine, not just albumin. Although albumin is usually the predominant protein in pathological proteinuria, proteinuria can also include other proteins such as globulins and enzymes.

One key difference between albuminuria and proteinuria lies in their diagnostic significance. Albuminuria is often considered an early sign of kidney damage, particularly in the context of diabetes. It is a sensitive marker for detecting early kidney dysfunction and can be an indicator of increased cardiovascular risk. On the other hand, proteinuria, especially when it involves other proteins besides albumin, may indicate more severe kidney damage or underlying systemic conditions.

Another important distinction is the measurement techniques used to assess albuminuria and proteinuria levels. Albuminuria is typically measured using a urine albumin-to-creatinine ratio (ACR) or a spot urine albumin test. These tests provide a quantitative assessment of the amount of albumin present in the urine. Proteinuria, on the other hand, is often measured using a 24-hour urine collection or a spot urine protein test. These tests provide a broader assessment of all types of proteins present in the urine.

Clinical implications also differ between albuminuria and proteinuria. Albuminuria, particularly in the context of diabetes, is associated with an increased risk of developing kidney disease and cardiovascular complications. It is an important marker for monitoring the progression of kidney disease and guiding treatment decisions. Proteinuria, especially when it involves other proteins besides albumin, may indicate more severe kidney damage and can be a sign of underlying systemic conditions such as autoimmune diseases or infections.

In summary, while albuminuria and proteinuria are related terms, they have distinct differences. Albuminuria specifically refers to the presence of albumin in the urine and is often considered an early sign of kidney damage, particularly in diabetes. Proteinuria, on the other hand, encompasses the presence of any type of protein in the urine and may indicate more severe kidney damage or underlying systemic conditions. The measurement techniques, diagnostic significance, and clinical implications of albuminuria and proteinuria also vary. Understanding these differences is crucial for accurate diagnosis and appropriate management of kidney-related conditions.

Clinical Implications

Understanding the clinical significance of distinguishing between albuminuria and proteinuria is crucial for accurate diagnosis and appropriate management of kidney-related conditions. While these terms are often used interchangeably, they have distinct differences that impact their diagnostic value and treatment implications.

Albuminuria, as we discussed earlier, refers specifically to the presence of albumin in the urine. It is a sensitive marker for detecting early kidney dysfunction, particularly in the context of diabetes. The measurement of albuminuria levels, usually done through a urine albumin-to-creatinine ratio (ACR) or a spot urine albumin test, provides a quantitative assessment of the amount of albumin excreted in the urine. This information is valuable in monitoring the progression of kidney disease and guiding treatment decisions.

The clinical implications of albuminuria extend beyond kidney health. Research has shown that albuminuria is associated with an increased risk of developing cardiovascular complications. It serves as an important marker for identifying individuals at higher risk of heart disease and stroke. By detecting albuminuria early on, healthcare providers can implement interventions to reduce cardiovascular risk factors and improve patient outcomes.

On the other hand, proteinuria encompasses the presence of any type of protein in the urine, not just albumin. Although albumin is usually the predominant protein in pathological proteinuria, proteinuria can also include other proteins such as globulins and enzymes. The measurement of proteinuria levels, often done through a 24-hour urine collection or a spot urine protein test, provides a broader assessment of all types of proteins present in the urine.

The presence of proteinuria, especially when it involves proteins other than albumin, may indicate more severe kidney damage or underlying systemic conditions. It can be a sign of advanced kidney disease or other health issues such as autoimmune diseases or infections. Identifying proteinuria and determining its underlying cause is essential for appropriate management and treatment planning.

Differentiating between albuminuria and proteinuria is not only important for diagnostic purposes but also for monitoring treatment response. For example, in individuals with diabetes, reducing albuminuria levels is a key treatment goal. By closely monitoring albuminuria levels over time, healthcare providers can assess the effectiveness of interventions such as blood pressure control, glucose management, and medication adjustments.

Moreover, the distinction between albuminuria and proteinuria has implications for research and clinical trials. Studies focusing on albuminuria as an endpoint can provide valuable insights into the efficacy of interventions in preventing or slowing the progression of kidney disease. By specifically targeting albuminuria reduction, researchers can evaluate the impact of interventions on kidney health and cardiovascular outcomes.

Quantifying Albuminuria Levels

Measurement Techniques

Methods for Assessing Albuminuria Levels

Assessing albuminuria levels is crucial in diagnosing and monitoring kidney function. Various techniques are employed to accurately measure albuminuria, providing valuable insights into the health of the kidneys. In this section, we will delve into the different methods used to assess albuminuria levels and their significance in clinical practice.

One commonly used method for assessing albuminuria levels is the urine albumin-to-creatinine ratio (UACR). This test measures the amount of albumin in the urine relative to the amount of creatinine, a waste product produced by the muscles. The UACR is a simple and convenient test that can be performed on a random urine sample. It is widely used in clinical settings due to its accuracy and reliability in detecting albuminuria.
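As a rough illustration of the ratio described above, the following Python sketch computes a UACR in mg/g from a spot-urine albumin concentration and a creatinine concentration. The function name and the input units (albumin in mg/L, creatinine in mg/dL) are assumptions chosen for this example; laboratories report in various units, so the conversion factor must match the units actually supplied.

```python
def uacr_mg_per_g(albumin_mg_per_l: float, creatinine_mg_per_dl: float) -> float:
    """Return the urine albumin-to-creatinine ratio in mg albumin per g creatinine.

    Assumed input units (illustrative only): albumin in mg/L, creatinine in mg/dL.
    """
    if albumin_mg_per_l < 0:
        raise ValueError("albumin concentration cannot be negative")
    if creatinine_mg_per_dl <= 0:
        raise ValueError("creatinine concentration must be positive")
    # Convert creatinine from mg/dL to g/L: x10 gives mg/L, /1000 gives g/L.
    creatinine_g_per_l = creatinine_mg_per_dl * 10 / 1000
    return albumin_mg_per_l / creatinine_g_per_l


if __name__ == "__main__":
    # Hypothetical sample: 45 mg/L albumin, 150 mg/dL creatinine.
    print(uacr_mg_per_g(45.0, 150.0))
```

For the hypothetical sample shown, 150 mg/dL of creatinine is 1.5 g/L, so the ratio works out to 30 mg/g. Dividing by creatinine is what lets a single random sample stand in for a timed collection, since it adjusts for how dilute or concentrated the urine is.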

Another method used to assess albuminuria levels is the 24-hour urine collection. This method involves collecting all urine produced over a 24-hour period and measuring the amount of albumin present. The 24-hour urine collection provides a more accurate assessment of albuminuria levels because it averages out the variations in albumin excretion that occur throughout the day. However, this method can be cumbersome for patients and may lead to incomplete or inaccurate collections.

In addition to these methods, there are also semi-quantitative tests available for assessing albuminuria levels. These tests, such as the dipstick test, give a graded estimate of the amount of albumin in the urine rather than an exact measurement. While these tests are less precise than quantitative methods, they can still be useful in screening for albuminuria in certain situations.

It is important to note that albuminuria levels can vary throughout the day and may be influenced by factors such as physical activity, diet, and medication. Therefore, it is recommended to perform multiple measurements over time to obtain a more accurate assessment of albuminuria levels. This longitudinal approach helps to account for any fluctuations and provides a clearer picture of kidney function.

The interpretation of albuminuria levels depends on the specific method used for assessment. Generally, albuminuria levels are classified into three categories: normal, microalbuminuria, and macroalbuminuria. Normal levels indicate that the kidneys are functioning properly, while microalbuminuria and macroalbuminuria indicate varying degrees of kidney damage. Microalbuminuria refers to a slightly elevated level of albumin in the urine, which can be an early sign of kidney dysfunction. Macroalbuminuria, on the other hand, indicates a significant increase in albumin levels and is often associated with more advanced kidney disease.

Assessing albuminuria levels is essential for evaluating kidney function. The urine albumin-to-creatinine ratio, 24-hour urine collection, and semi-quantitative tests are commonly used methods for measuring albuminuria. Each method has its advantages and limitations, and multiple measurements over time are recommended for a more accurate assessment. Understanding albuminuria levels helps healthcare professionals diagnose and monitor kidney disease, enabling timely intervention and management.

Normal vs Abnormal Levels

When assessing albuminuria levels, it is important to establish the parameters that determine whether the levels are within the normal range or indicate abnormal kidney function. By understanding these parameters, healthcare professionals can accurately diagnose and monitor kidney disease. In this section, we will explore the criteria used to classify albuminuria levels as normal or abnormal.

The classification of albuminuria levels is primarily based on the amount of albumin present in the urine. Normal levels of albumin in the urine indicate that the kidneys are functioning properly and there is no evidence of kidney damage. On the other hand, abnormal levels of albumin in the urine suggest varying degrees of kidney dysfunction.

Microalbuminuria is a term used to describe slightly elevated levels of albumin in the urine. It is considered an early sign of kidney dysfunction and can be an indicator of early-stage kidney disease. The threshold for diagnosing microalbuminuria varies depending on the method used for assessment. The urine albumin-to-creatinine ratio (UACR) is commonly used to determine microalbuminuria levels. Generally, a UACR between 30 and 300 mg/g is considered indicative of microalbuminuria. However, it is important to note that different guidelines may have slightly different thresholds for diagnosis.

Macroalbuminuria, also known as overt albuminuria, refers to a significant increase in albumin levels in the urine. It is often associated with more advanced kidney disease and indicates a higher degree of kidney damage. The threshold for diagnosing macroalbuminuria is typically a UACR greater than 300 mg/g. At this level, the damaged filtration barrier is no longer retaining albumin in the blood, so large amounts of it are lost in the urine.
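The UACR cut-offs given above can be expressed as a small classifier. This is a minimal sketch: the function name and category labels are chosen for illustration, the boundary values (exactly 30 and exactly 300 mg/g) are assigned to microalbuminuria as one reading of "between 30 and 300", and, as noted above, individual guidelines may draw the lines slightly differently.

```python
def classify_uacr(uacr_mg_per_g: float) -> str:
    """Classify a UACR value (mg/g) using the thresholds quoted in the text.

    < 30 mg/g     -> "normal"
    30-300 mg/g   -> "microalbuminuria" (boundaries included; an assumption)
    > 300 mg/g    -> "macroalbuminuria"
    """
    if uacr_mg_per_g < 0:
        raise ValueError("UACR cannot be negative")
    if uacr_mg_per_g < 30:
        return "normal"
    if uacr_mg_per_g <= 300:
        return "microalbuminuria"
    return "macroalbuminuria"


if __name__ == "__main__":
    for value in (12.0, 30.0, 150.0, 300.0, 450.0):
        print(value, classify_uacr(value))
```

A single category from one sample is only a starting point; the result still has to be read alongside symptoms, history, and other test results.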

It is worth mentioning that the classification of albuminuria levels is not solely based on the UACR. Other factors, such as the presence of other symptoms, medical history, and the results of additional tests, are also taken into consideration when determining the severity of kidney disease. These factors help healthcare professionals make a comprehensive assessment and develop an appropriate treatment plan.

In addition to the UACR, the 24-hour urine collection method is another way to assess albuminuria levels. Normal levels of albumin in a 24-hour urine collection are typically less than 30 mg. Levels between 30 and 300 mg are considered indicative of microalbuminuria, while levels greater than 300 mg suggest macroalbuminuria.

It is important to note that albuminuria levels can fluctuate throughout the day and may be influenced by various factors such as physical activity, diet, and medication. Therefore, it is recommended to perform multiple measurements over time to obtain a more accurate assessment of albuminuria levels. This longitudinal approach helps to account for any variations and provides a clearer picture of kidney function.
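One simple way to picture the longitudinal approach is to summarize several UACR results before classifying them. The sketch below takes the median of repeated measurements so that a single outlying sample (for example, one taken after strenuous exercise) does not decide the category. Using the median is an assumption made for illustration, not a rule taken from any guideline, and the function names are hypothetical.

```python
from statistics import median
from typing import Sequence, Tuple


def classify_uacr(uacr_mg_per_g: float) -> str:
    """Classify a UACR value (mg/g): <30 normal, 30-300 micro, >300 macro."""
    if uacr_mg_per_g < 30:
        return "normal"
    if uacr_mg_per_g <= 300:
        return "microalbuminuria"
    return "macroalbuminuria"


def summarize_repeated_uacr(values: Sequence[float]) -> Tuple[float, str]:
    """Return the median UACR of repeated samples and its category.

    The median is an illustrative choice for damping day-to-day variation.
    """
    if not values:
        raise ValueError("at least one measurement is required")
    if any(v < 0 for v in values):
        raise ValueError("UACR values cannot be negative")
    typical = median(values)
    return typical, classify_uacr(typical)


if __name__ == "__main__":
    # Three hypothetical samples collected on different days.
    print(summarize_repeated_uacr([25.0, 80.0, 60.0]))
```

With the three hypothetical samples shown, the median is 60 mg/g, which falls in the microalbuminuria range even though one individual sample was below 30 mg/g.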

The classification of albuminuria levels as normal or abnormal is based on the amount of albumin present in the urine. Microalbuminuria refers to slightly elevated levels of albumin and is considered an early sign of kidney dysfunction. Macroalbuminuria indicates a significant increase in albumin levels and is associated with more advanced kidney disease. The UACR and 24-hour urine collection are commonly used methods to assess albuminuria levels. Multiple measurements over time are recommended to obtain a more accurate assessment. Understanding the classification of albuminuria levels helps healthcare professionals diagnose and monitor kidney disease, enabling timely intervention and management.

Summary

Throughout this article, we have explored the concepts of microalbuminuria and albuminuria, delving into their definitions, clinical significance, and diagnostic criteria. By understanding these terms and their implications, healthcare professionals can effectively diagnose and monitor kidney disease, enabling timely intervention and management.

Microalbuminuria is characterized by slightly elevated levels of albumin in the urine. It serves as an early sign of kidney dysfunction and can be an indicator of early-stage kidney disease. The urine albumin-to-creatinine ratio (UACR) is commonly used to determine microalbuminuria levels, with a UACR between 30 and 300 mg/g considered indicative of this condition. However, it is important to note that different guidelines may have slightly different thresholds for diagnosis.

On the other hand, macroalbuminuria, also known as overt albuminuria, refers to a significant increase in albumin levels in the urine. It is often associated with more advanced kidney disease and indicates a higher degree of kidney damage. A UACR greater than 300 mg/g is typically used to diagnose macroalbuminuria. At this level, the damaged filtration barrier is no longer retaining albumin in the blood, so large amounts of it are lost in the urine.

It is worth mentioning that the classification of albuminuria levels is not solely based on the UACR. Other factors, such as the presence of other symptoms, medical history, and the results of additional tests, are also taken into consideration when determining the severity of kidney disease. This comprehensive assessment helps healthcare professionals develop an appropriate treatment plan tailored to the individual patient.

In addition to the UACR, the 24-hour urine collection method is another way to assess albuminuria levels. Normal levels of albumin in a 24-hour urine collection are typically less than 30 mg. Levels between 30 and 300 mg are considered indicative of microalbuminuria, while levels greater than 300 mg suggest macroalbuminuria. However, it is important to note that albuminuria levels can fluctuate throughout the day and may be influenced by various factors such as physical activity, diet, and medication. Therefore, performing multiple measurements over time is recommended to obtain a more accurate assessment of albuminuria levels.

In conclusion, microalbuminuria and albuminuria are important indicators of kidney function and can provide valuable insights into the presence and progression of kidney disease. Microalbuminuria serves as an early warning sign, while macroalbuminuria indicates more advanced kidney damage. The UACR and 24-hour urine collection are commonly used methods to assess albuminuria levels, but a comprehensive evaluation that considers other factors is necessary for an accurate diagnosis. By understanding the classification of albuminuria levels and utilizing appropriate diagnostic tools, healthcare professionals can effectively manage and treat kidney disease, improving patient outcomes and quality of life.

Biochemical Tests Used to Assess Renal Function

Renal function is often evaluated using two primary biochemical parameters: blood urea nitrogen (BUN) and serum creatinine. Despite their convenience, these markers prove to be less sensitive indicators of glomerular function.

Blood Urea Nitrogen (BUN)

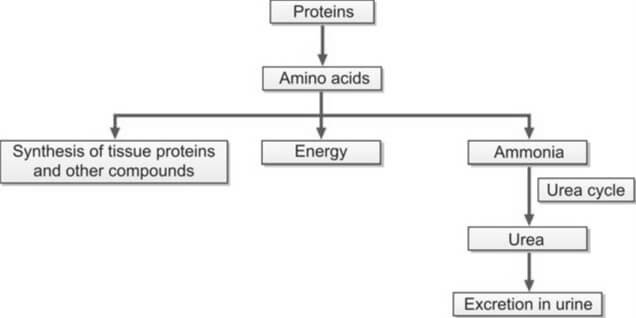

Urea is formed in the liver from amino acids, whether derived from the diet or from tissue breakdown. Amino acids are used for energy production and protein synthesis, and their catabolism produces ammonia. The liver converts this ammonia into urea through the Krebs-Henseleit (urea) cycle. Because ammonia is toxic, its conversion to urea allows safe elimination in the urine (refer to Figure 1).

The concentration of blood urea is commonly expressed as blood urea nitrogen (BUN) because older methods measured only the nitrogen content of urea. The molecular weight of urea is 60, and one gram mole of urea contains 28 grams of nitrogen. The actual urea concentration is therefore BUN × (60/28), that is, BUN multiplied by 2.14.
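The conversion can be illustrated with a short sketch. The function and constant names are hypothetical and chosen only for this example; both values are assumed to be in mg/dl.

```python
UREA_MOLECULAR_WEIGHT = 60.0   # grams per gram mole of urea
NITROGEN_IN_UREA = 28.0        # grams of nitrogen per gram mole of urea

# Conversion factor from BUN to urea (60/28, approximately 2.14)
BUN_TO_UREA_FACTOR = UREA_MOLECULAR_WEIGHT / NITROGEN_IN_UREA


def bun_to_urea(bun_mg_dl: float) -> float:
    """Convert blood urea nitrogen (mg/dl) to urea (mg/dl)."""
    return bun_mg_dl * BUN_TO_UREA_FACTOR


if __name__ == "__main__":
    # A BUN of 14 mg/dl corresponds to about 30 mg/dl of urea.
    print(round(BUN_TO_UREA_FACTOR, 2))
    print(round(bun_to_urea(14.0), 1))
```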

Glomeruli completely filter urea, and depending on an individual's hydration status, approximately 30-40% of the filtered amount is reabsorbed in the renal tubules.

The blood level of urea is susceptible to various non-renal factors, such as a high-protein diet, upper gastrointestinal hemorrhage, and liver function. Consequently, the utility of BUN as a reliable indicator of renal function is limited. Significant destruction of renal parenchyma is necessary before an elevation in blood urea can be observed.

Azotemia refers to an increase in the blood level of urea, while uremia represents the clinical syndrome resulting from this elevation. In the absence of renal function, BUN experiences a daily rise of 10-20 mg/dl.

Causes of increased BUN

- Pre-renal Azotemia: Conditions such as shock, congestive heart failure, and salt and water depletion

- Renal Azotemia: Impairment of renal function

- Post-renal Azotemia: Obstruction of the urinary tract

- Increased Rate of Urea Production:

  - Adoption of a high-protein diet

  - Elevated protein catabolism due to factors such as trauma, burns, or fever

  - Absorption of amino acids and peptides resulting from significant gastrointestinal hemorrhage or tissue hematoma

Methods for estimation of BUN

Two methods are commonly used.

- Diacetyl Monoxime Urea Method: A direct approach involving the reaction of urea with diacetyl monoxime at high temperatures, facilitated by a strong acid and an oxidizing agent. This reaction yields a yellow diazine derivative, and the color intensity is quantified using a colorimeter or spectrophotometer.

- Urease-Berthelot Reaction: An indirect method in which the enzyme urease splits ammonia from the urea molecule at 37°C. The ammonia is then reacted with alkaline hypochlorite and phenol in the presence of a catalyst to produce a stable colored compound, indophenol. The intensity of the color is measured at 570 nm using a spectrophotometer.

The reference range for blood urea nitrogen (BUN) in adults is 7 to 18 mg/dl. In individuals over 60 years of age, the range is slightly higher, at 8 to 21 mg/dl.

Serum Creatinine

Creatinine, a nitrogenous waste product, originates in muscle through the conversion of creatine phosphate. Its endogenous production correlates with muscle mass and body weight, with exogenous creatinine from meat ingestion exerting minimal influence on daily creatinine excretion.

When compared to Blood Urea Nitrogen (BUN), serum creatinine emerges as a more specific and sensitive indicator of renal function for several reasons:

- Creatinine is consistently produced by muscles at a steady rate, remaining unaffected by dietary variations, protein catabolism, or other external factors.

- Unlike BUN, creatinine is not reabsorbed, and only a minimal amount is secreted by the renal tubules.

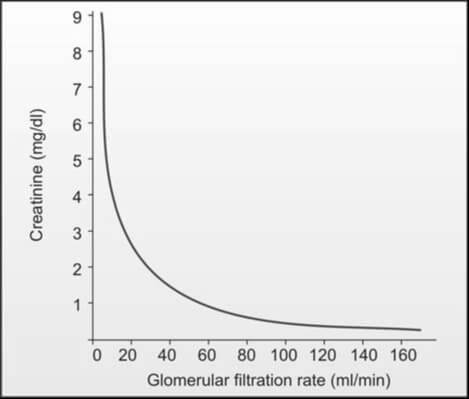

While an increased creatinine level reflects a reduction in glomerular filtration rate when muscle mass is constant, the manifestation of elevated serum creatinine levels (e.g., from 1.0 mg/dl to 2.0 mg/dl) in blood is delayed until about 50% of kidney function is lost, owing to significant kidney reserve. Consequently, serum creatinine proves less sensitive in detecting early renal impairment. It's important to note that a laboratory report indicating serum creatinine within the normal range does not necessarily denote normalcy; the level should be correlated with the individual's body weight, age, and sex. In the absence of renal function, serum creatinine rises by 1.0 to 1.5 mg/dl per day (refer to Figure 2).

Causes of Increased Serum Creatinine Level

- Pre-renal, renal, and post-renal azotemia

- High intake of dietary meat

- Presence of active acromegaly and gigantism

Causes of Decreased Serum Creatinine Level

- Pregnancy

- Increasing age (reduction in muscle mass)

Methods for Estimation of Serum Creatinine

The assay for serum creatinine stands out for its cost-effectiveness, widespread availability, and simplicity in execution. Two commonly employed methods are as follows:

- Jaffe’s Reaction (Alkaline Picrate Reaction): This is the most widely used method. In an alkaline solution, creatinine reacts with picrate to produce a colored complex whose absorbance is measured at 485 nm. Certain plasma substances (such as glucose, protein, fructose, ascorbic acid, acetoacetate, acetone, and cephalosporins) react with picrate in a similar way; these are called non-creatinine chromogens. They falsely elevate the measured result, so the 'true' creatinine value is 0.2 to 0.4 mg/dl lower than the value obtained by Jaffe’s reaction.

- Enzymatic Methods: These methods use enzymes that cleave creatinine. The hydrogen peroxide generated in the reaction sequence then reacts with phenol and a dye to produce a colored product, which is measured by spectrophotometry.

Reference Range

- Adult males: 0.7-1.3 mg/dl

- Adult females: 0.6-1.1 mg/dl

Relying solely on serum creatinine for the evaluation of renal function is not recommended. The concentration of serum creatinine is influenced by factors such as age, sex, muscle mass, glomerular filtration, and the extent of tubular secretion. Consequently, the normal range for serum creatinine is broad. The elevation of serum creatinine becomes apparent when the glomerular filtration rate (GFR) falls below 50% of the normal level. Even a minor increase in serum creatinine is indicative of a significant reduction in GFR, as illustrated in Figure 2. Consequently, the early stages of chronic renal impairment cannot be effectively identified through the measurement of serum creatinine alone.

BUN/Serum Creatinine Ratio

Clinicians commonly calculate BUN/creatinine ratio as a diagnostic tool to differentiate pre-renal and post-renal azotemia from renal azotemia. The standard range for this ratio is 12:1 to 20:1.

Causes of Increased BUN/Creatinine Ratio (>20:1):

- Elevated BUN with normal serum creatinine:

  - Pre-renal azotemia (resulting from reduced renal perfusion)

  - High protein diet

  - Increased protein catabolism

  - Gastrointestinal hemorrhage

- Elevation of both BUN and serum creatinine with a disproportionately greater increase in BUN:

  - Post-renal azotemia (caused by obstruction to urine outflow). The increased backpressure causes urinary urea to diffuse back from the tubules into the bloodstream.

Causes of Decreased BUN/Creatinine Ratio (<10:1)

- Acute tubular necrosis

- Low protein diet and starvation

- Severe liver disease
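The ratio and the cut-offs listed above can be sketched as follows. This is an illustrative helper only, with hypothetical function names; both inputs are assumed to be in mg/dl. The text gives a normal range of 12:1 to 20:1 but a "decreased" cut-off of below 10:1, so values from 10 up to (but not including) 12 are labelled "borderline" here as an assumption.

```python
def bun_creatinine_ratio(bun_mg_dl: float, creatinine_mg_dl: float) -> float:
    """Return the BUN/creatinine ratio (both inputs in mg/dl)."""
    if creatinine_mg_dl <= 0:
        raise ValueError("serum creatinine must be positive")
    return bun_mg_dl / creatinine_mg_dl


def interpret_ratio(ratio: float) -> str:
    """Label a ratio using the cut-offs quoted in the text."""
    if ratio > 20:
        return "increased"   # e.g. pre-renal or post-renal azotemia
    if ratio < 10:
        return "decreased"   # e.g. acute tubular necrosis, low protein intake
    if ratio >= 12:
        return "normal"
    return "borderline"      # 10 to <12: not defined in the text (assumption)


if __name__ == "__main__":
    ratio = bun_creatinine_ratio(bun_mg_dl=40.0, creatinine_mg_dl=1.0)
    print(ratio, interpret_ratio(ratio))
```

A label such as "increased" only narrows the differential; the listed causes still have to be distinguished clinically.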

Clearance Tests to Measure Glomerular Filtration Rate (GFR)

Glomerular filtration rate (GFR) is the rate, in ml/min, at which a substance is cleared from the circulation by the glomeruli. The ability of the glomeruli to filter a substance from the blood is assessed by clearance studies. If a substance is not bound to plasma proteins, is completely filtered by the glomeruli, and is neither secreted nor reabsorbed by the tubules, its clearance rate equals the GFR.

The clearance of a substance denotes the volume of plasma entirely purged of that substance per minute, calculated using the formula:

Clearance = UV⁄P

Here, U is the concentration of the substance in urine in mg/dl, V is the volume of urine excreted in ml/min, and P is the concentration of the substance in plasma in mg/dl. Because U and P have the same units, they cancel, and clearance is expressed in the same unit as V, i.e., ml/min. All clearance values are adjusted to a standard body surface area of 1.73 m².
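As a worked illustration of the formula, the sketch below computes a clearance and then scales it to 1.73 m². The function names are hypothetical, and the scaling step (multiplying by 1.73 and dividing by the patient's body surface area) is the conventional adjustment, stated here as an assumption because the text does not spell it out. The patient's body surface area is taken as an input rather than estimated.

```python
STANDARD_BSA_M2 = 1.73  # standard body surface area used for adjustment


def clearance_ml_min(u_mg_dl: float, v_ml_min: float, p_mg_dl: float) -> float:
    """Clearance = U * V / P; U and P share units, so the result is in ml/min."""
    if p_mg_dl <= 0:
        raise ValueError("plasma concentration must be positive")
    return u_mg_dl * v_ml_min / p_mg_dl


def adjust_to_standard_bsa(clearance: float, patient_bsa_m2: float) -> float:
    """Scale a clearance (ml/min) to ml/min per 1.73 m² (assumed convention)."""
    if patient_bsa_m2 <= 0:
        raise ValueError("body surface area must be positive")
    return clearance * STANDARD_BSA_M2 / patient_bsa_m2


if __name__ == "__main__":
    raw = clearance_ml_min(u_mg_dl=100.0, v_ml_min=1.0, p_mg_dl=1.0)
    print(raw)                                # raw clearance in ml/min
    print(adjust_to_standard_bsa(raw, 2.0))   # adjusted for a 2.0 m² patient
```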

The substances employed for gauging glomerular filtration rate (GFR) encompass:

- Exogenous: Inulin, radiolabelled ethylenediamine tetraacetic acid (51Cr-EDTA), 125I-iothalamate

- Endogenous: Creatinine, Urea, Cystatin C

The selected agent for GFR measurement should exhibit the following properties: (1) Physiological inertness, preferably endogenous nature, (2) Unrestricted filtration by glomeruli without reabsorption or secretion by renal tubules, (3) No binding to plasma proteins and resistance to renal metabolism, and (4) Sole excretion by the kidneys. However, an entirely ideal endogenous agent remains elusive.

Conducting clearance tests proves to be intricate, costly, and not readily accessible. A significant challenge in clearance studies lies in the potential for incomplete urine collection.

Abnormal clearance is seen in: (i) pre-renal conditions such as reduced blood flow due to shock, dehydration, and congestive cardiac failure; (ii) renal diseases; and (iii) obstruction to urinary outflow.

Inulin Clearance

Inulin, an inert plant polysaccharide (a fructose polymer), is filtered by the glomeruli and is neither reabsorbed nor secreted by the tubules, which makes it an ideal agent for measuring GFR. A bolus dose of inulin (25 ml of 10% solution IV) is administered, followed by a constant intravenous infusion (500 ml of 1.5% solution at a rate of 4 ml/min). Timed urine samples are collected, and blood samples are obtained at the midpoint of each timed urine collection. Although this is the 'gold standard' (reference method) for estimation of GFR, it is rarely used in routine clinical practice and is largely reserved for clinical research, because it is time-consuming and expensive, requires continuous intravenous infusion of inulin to maintain a steady plasma level, and poses difficulties in laboratory analysis. The average inulin clearance is 125 ml/min/1.73 m² for males and 110 ml/min/1.73 m² for females. Clearance is lower in children under 2 years and in older adults.

Clearance of Radiolabeled Agents

Urinary clearance of radiolabeled iothalamate (125I-iothalamate) correlates closely with inulin clearance. However, this technique is expensive and carries a risk of exposure to radioactive substances. Other radiolabeled substances used for the same purpose are 51Cr-EDTA and 99mTc-DTPA.

Cystatin C Clearance

Cystatin C is a cysteine protease inhibitor with a molecular weight of 13,000 that is produced at a constant rate by all nucleated cells. It is not bound to plasma proteins, is freely filtered by the glomeruli, and does not return to the circulation after filtration. It is a more sensitive and specific marker of impaired renal function than plasma creatinine, and its level is not affected by sex, diet, or muscle mass. Many consider cystatin C a better estimator of GFR than creatinine clearance. It is measured by immunoassay.

Creatinine Clearance

The most widely employed method for assessing GFR is through creatinine clearance testing.

Creatinine, a continuous byproduct of muscle creatine, undergoes complete filtration by glomeruli and experiences negligible reabsorption by tubules, with a minor portion being tubularly secreted.

A 24-hour urine sample is the preferred collection method, mitigating issues related to diurnal variations in creatinine excretion and enhancing collection accuracy.

The patient discards the first morning void and then collects all subsequent urine in the container provided. The first void of the next morning is also collected, and the container is sent to the laboratory. A blood sample is drawn at the midpoint of the urine collection period to measure plasma creatinine. Creatinine clearance is calculated from (1) the concentration of creatinine in urine in mg/dl (U), (2) the volume of urine excreted in ml/min (V), obtained by dividing the volume of urine collected by the collection time in minutes (e.g., volume of urine collected in 24 hours ÷ 1440), and (3) the concentration of creatinine in plasma in mg/dl (P). Creatinine clearance in ml/min per 1.73 m² is then derived from the formula UV/P.
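The calculation for a timed collection can be sketched as below. The function names are hypothetical, and urine and plasma creatinine are both assumed to be reported in mg/dl so that the units cancel, as in the general clearance formula given earlier. The result is the raw clearance in ml/min; adjustment to 1.73 m² is not included.

```python
MINUTES_IN_24_HOURS = 24 * 60  # 1440


def urine_flow_ml_min(urine_volume_ml: float,
                      collection_minutes: float = MINUTES_IN_24_HOURS) -> float:
    """V: total urine volume divided by the collection time in minutes."""
    if collection_minutes <= 0:
        raise ValueError("collection time must be positive")
    return urine_volume_ml / collection_minutes


def creatinine_clearance_ml_min(urine_creatinine_mg_dl: float,
                                urine_volume_ml: float,
                                plasma_creatinine_mg_dl: float,
                                collection_minutes: float = MINUTES_IN_24_HOURS) -> float:
    """Creatinine clearance = U * V / P for a timed urine collection."""
    if plasma_creatinine_mg_dl <= 0:
        raise ValueError("plasma creatinine must be positive")
    v = urine_flow_ml_min(urine_volume_ml, collection_minutes)
    return urine_creatinine_mg_dl * v / plasma_creatinine_mg_dl


if __name__ == "__main__":
    # Illustrative values only: 1440 ml collected over 24 hours gives V = 1 ml/min.
    result = creatinine_clearance_ml_min(
        urine_creatinine_mg_dl=100.0,
        urine_volume_ml=1440.0,
        plasma_creatinine_mg_dl=1.0,
    )
    print(round(result, 1))
```

Passing `collection_minutes` explicitly allows for collections that did not last exactly 24 hours, which matters because incomplete or mistimed collection is a major source of error in clearance studies.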