
CHOLERA: CAUSES, SYMPTOMS, DIAGNOSIS AND TREATMENT

CHOLERA is a specific infectious disease that affects the lower portion of the intestine and is characterized by violent purging, vomiting, muscular cramps, suppression of urine and rapid collapse. It can be a terrifying disease with massive diarrhea. The patient’s daily fluid losses are enormous, causing severe and rapid dehydration, and death can come within hours.

Total Thyroxine (T4)

Thyroxine, also recognized as T4, is a vital hormone produced by the thyroid gland. It plays a pivotal role in regulating metabolic processes and fostering growth throughout the body.

The presence of T4 in the bloodstream can be categorized into two distinct forms:

- Total T4: This encompasses the entire quantity of thyroxine present in the blood. It includes both the 'bound' T4, which is attached to proteins, and the 'free' T4. The bound T4 is inactive as it is attached to proteins, preventing it from entering body tissues.

- Free T4: This refers exclusively to the unbound portion of T4 that is not attached to proteins. Free T4 is the metabolically active form of the hormone, capable of entering body tissues to exert its effects.

A T4 test is a diagnostic tool that measures the concentration of T4 in your blood. Abnormal levels, either too high or too low, can be indicative of thyroid disease. The measurement of Free T4 is particularly crucial as it reflects the active form of thyroxine hormone available for tissue uptake, thereby providing a more accurate reflection of thyroid function.

The quantification of total serum thyroxine, which encompasses both the free and protein-bound forms, is typically achieved through the application of competitive immunoassay techniques. The standard range for adults is established between 5.0 and 12.0 μg/dl.

It is common practice to conduct a combined analysis of either total or free thyroxine alongside Thyroid Stimulating Hormone (TSH) levels. This combined approach offers the most comprehensive evaluation of thyroid functionality.

Causes of Increased Total T4

- Hyperthyroidism: Primary hyperthyroidism is suggested by the concurrent rise in both T4 and T3 levels, accompanied by a decrease in TSH.

- Augmented Thyroxine-Binding Globulin: An increase in TBG concentration leads to a decrease in free hormone levels, which in turn stimulates the release of TSH from the pituitary, restoring the free hormone concentration to normal. The reverse process occurs if the concentration of binding proteins decreases. In both scenarios, the level of free hormones remains within the normal range, while the concentration of total hormone undergoes changes. Consequently, estimating only the total T4 concentration can lead to misinterpretation of results in situations that alter the concentration of TBG.

- Factitious Hyperthyroidism

- Tumors Secreting TSH in the Pituitary Gland

Causes of Decreased Total T4

- Primary Hypothyroidism: Primary hypothyroidism is characterized by a decrease in T4 levels coupled with an increase in TSH.

- Secondary or Pituitary Hypothyroidism

- Tertiary or Hypothalamic Hypothyroidism

- Hypoproteinemia: This condition, exemplified by the nephrotic syndrome, can lead to a decrease in total T4.

- Pharmaceutical Influence: Certain drugs that lower TBG, such as androgens and danazol, can reduce total T4 levels. Oestrogens have the opposite effect: they raise TBG and therefore total T4.

- Severe Non-Thyroidal Illness

Free Thyroxine (FT4)

Free thyroxine (FT4) represents a minuscule fraction of total T4, remains unbound to proteins, and is the metabolically active variant of the hormone. It accounts for approximately 0.05% of total T4. The standard range is 0.7 to 1.9 ng/dl. The concentrations of free hormones (FT4 and FT3) align more accurately with the metabolic state than total hormone levels, as they remain unaffected by fluctuations in TBG concentrations.

The assessment of FT4 proves beneficial in scenarios where the total T4 level is likely to be modified due to changes in TBG level, such as during pregnancy, intake of oral contraceptives, or in the presence of nephrotic syndrome.

Total and Free Triiodothyronine (T3)

Applications

- Diagnosis of T3 Thyrotoxicosis: A condition characterized by hyperthyroidism with diminished TSH and elevated T3, and normal T4/FT4 levels is referred to as T3 thyrotoxicosis.

- Early Detection of Hyperthyroidism: In the initial stages of hyperthyroidism, total T4 and free T4 levels remain within the normal range, but T3 levels are elevated.

A low T3 level does not contribute significantly to the diagnosis of hypothyroidism as it is observed in approximately 25% of healthy individuals.

For routine evaluation of thyroid function, TSH and T4 are measured. T3 is not routinely estimated due to its very low normal plasma levels.

The standard T3 level is 80-180 ng/dl.

Free T3: The measurement of free T3 provides accurate values in patients with altered serum protein levels, such as during pregnancy, intake of estrogens or oral contraceptives, and in the presence of nephrotic syndrome. It represents 0.5% of total T3.

Thyrotropin Releasing Hormone (TRH) Stimulation Test

Applications

- Confirmation of Secondary Hypothyroidism Diagnosis

- Evaluation of Suspected Hypothalamic Disease

- Suspected Hyperthyroidism

This test is not frequently used in current times due to the availability of sensitive TSH assays.

Procedure

- A baseline blood sample is collected for the estimation of basal serum TSH level.

- TRH is administered intravenously (200 or 500 μg), followed by the measurement of serum TSH at 20 and 60 minutes.

Interpretation

- Normal Response: A rise of TSH > 2 mU/L at 20 minutes, followed by a slight decline at 60 minutes.

- Exaggerated Response: A further significant rise in an already elevated TSH level at 20 minutes, followed by a slight decrease at 60 minutes; observed in primary hypothyroidism.

- Flat Response: No response; observed in secondary (pituitary) hypothyroidism.

- Delayed Response: TSH is higher at 60 minutes compared to its level at 20 minutes; seen in tertiary (hypothalamic) hypothyroidism.
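The interpretation rules above can be sketched as a small classifier. This is an illustrative sketch only, not a clinical tool. The function name `classify_trh_response`, the caller-supplied `baseline_elevated` flag (the text gives no numeric cutoff for an elevated basal TSH), and the choice to treat a rise of 2 mU/L or less as a flat response are all assumptions made for the example.

```python
NORMAL_RISE_MU_PER_L = 2.0  # rise quoted in the text for a normal response


def classify_trh_response(basal: float, at_20_min: float, at_60_min: float,
                          baseline_elevated: bool) -> str:
    """Classify a TRH stimulation test from three serum TSH values (mU/L).

    baseline_elevated is passed in by the caller because the text does not
    give a numeric cutoff for an elevated basal TSH (assumption).
    """
    rise_20 = at_20_min - basal
    rise_60 = at_60_min - basal

    # Delayed: TSH is higher at 60 minutes than at 20 minutes.
    if at_60_min > at_20_min and rise_60 > NORMAL_RISE_MU_PER_L:
        return "delayed"
    # Flat: no meaningful rise (assumed here to mean 2 mU/L or less).
    if rise_20 <= NORMAL_RISE_MU_PER_L:
        return "flat"
    # Exaggerated: a further rise on top of an already elevated basal TSH.
    if baseline_elevated:
        return "exaggerated"
    return "normal"
```

For instance, `classify_trh_response(0.5, 1.5, 5.0, baseline_elevated=False)` falls into the delayed branch, the pattern the text associates with tertiary (hypothalamic) hypothyroidism.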

Antithyroid Antibodies

Box 1: Thyroid autoantibodies

- Useful for diagnosis and monitoring of autoimmune thyroid diseases.

- Antimicrosomal or antithyroid peroxidase antibodies: Hashimoto’s thyroiditis

- Anti-TSH receptor antibodies: Graves’ disease

In thyroid disorders such as Hashimoto’s thyroiditis and Graves’ disease, various autoantibodies, including TSH receptor, antimicrosomal, and antithyroglobulin, are detected. In almost all patients with Hashimoto’s disease, antimicrosomal (also known as thyroid peroxidase) and anti-thyroglobulin antibodies are observed. TSH receptor antibodies (TRAb) are primarily tested in Graves’ disease to predict the outcome post-treatment.

Radioactive Iodine Uptake (RAIU) Test

This direct test evaluates the trapping of iodide by the thyroid gland (through the iodine symporters or pumps in follicular cells) for thyroid hormone synthesis. Patients are administered a tracer dose of radioactive iodine (either 131I or 123I) orally. This is followed by the measurement of the amount of radioactivity over the thyroid gland at intervals of 2 to 6 hours and again at 24 hours. RAIU directly correlates with the functional activity of the thyroid gland. The normal RAIU is about 10-30% of the administered dose at 24 hours, but this varies according to geographic location due to differences in dietary intake.

Causes of Increased Uptake

Hyperthyroidism due to Graves’ disease, toxic multinodular goiter, toxic adenoma, TSH-secreting tumor.

Causes of Decreased Uptake

Hyperthyroidism due to administration of thyroid hormone, factitious hyperthyroidism, subacute thyroiditis.

Uses

RAIU is most useful in the differential diagnosis of hyperthyroidism by distinguishing causes into those due to increased uptake and those due to decreased uptake.

Thyroid Scintiscanning

An isotope (99mTc-pertechnetate) is administered and a gamma camera assesses its distribution within the thyroid gland.

Interpretation

- Differential diagnosis of high RAIU thyrotoxicosis:
  - Graves’ disease: Uniform or diffuse increase in uptake
  - Toxic multinodular goiter: Multiple discrete areas of increased uptake
  - Adenoma: Single area of increased uptake
- Evaluation of a solitary thyroid nodule:
  - ‘Hot’ nodule: Hyperfunctioning
  - ‘Cold’ nodule: Non-functioning; about 20% of cases are malignant.

The interpretation of thyroid function tests is shown in Table 1.

| Test results | Interpretations |
|---|---|
| TSH Normal, FT4 Normal | Euthyroid |
| Low TSH, Low FT4 | Secondary hypothyroidism |
| High TSH, Normal FT4 | Subclinical hypothyroidism |
| High TSH, Low FT4 | Primary hypothyroidism |
| Low TSH, Normal FT4, Normal FT3 | Subclinical hyperthyroidism |
| Low TSH, Normal FT4, High FT3 | T3 toxicosis |
| Low TSH, High FT4 | Primary hyperthyroidism |
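The table can also be read as a simple lookup. The sketch below encodes its rows directly. It assumes each result has already been classified as "low", "normal" or "high" against the laboratory's own reference range, and the names `TABLE_1` and `interpret_thyroid_tests` are hypothetical. It illustrates the table's logic and is not a diagnostic tool.

```python
from typing import Optional

# Rows of the table keyed by (TSH, FT4); each level is "low", "normal" or "high".
TABLE_1 = {
    ("normal", "normal"): "Euthyroid",
    ("low", "low"): "Secondary hypothyroidism",
    ("high", "normal"): "Subclinical hypothyroidism",
    ("high", "low"): "Primary hypothyroidism",
    ("low", "high"): "Primary hyperthyroidism",
}


def interpret_thyroid_tests(tsh: str, ft4: str, ft3: Optional[str] = None) -> str:
    """Return the table's interpretation for pre-classified TSH, FT4 and FT3."""
    # Low TSH with normal FT4 is the only pattern where FT3 decides the row.
    if tsh == "low" and ft4 == "normal":
        if ft3 == "high":
            return "T3 toxicosis"
        if ft3 == "normal":
            return "Subclinical hyperthyroidism"
        return "FT3 needed to interpret"
    # Combinations absent from the table are reported as such, not guessed.
    return TABLE_1.get((tsh, ft4), "Not covered by Table 1")
```

Combinations the table does not list, such as high TSH with high FT4, are returned as "Not covered by Table 1" rather than forced into the nearest row.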

Neonatal Screening for Hypothyroidism

During the neonatal phase, a deficiency in thyroid hormones can lead to severe mental impairment, a condition known as cretinism. This can be averted through prompt detection and intervention. The Thyroid Stimulating Hormone (TSH) level is typically assessed using dry blood spots on filter paper or cord serum, collected between the 3rd and 5th days of life. An elevated TSH level is indicative of hypothyroidism. For infants diagnosed with hypothyroidism, a Radioactive Iodine Uptake (RAIU) scan using 123I should be performed to differentiate between thyroid agenesis and dyshormonogenesis.

Disorders of Thyroid Gland: Hypothyroidism & Hyperthyroidism

Within the spectrum of endocrine disorders, thyroid-related conditions are prevalent and are second only to diabetes mellitus in terms of frequency. These disorders exhibit a higher incidence in females compared to males. Functional disorders of the thyroid can be bifurcated into two categories based on the gland’s activity level: hypothyroidism, characterized by a deficiency of thyroid hormones, and hyperthyroidism, marked by an overproduction of thyroid hormones. Each of these conditions can stem from a multitude of causes.

Thyroid disease encompasses a variety of conditions that affect the functionality of the thyroid gland, a crucial component of the endocrine system. This butterfly-shaped gland, nestled at the front of the neck, plays a pivotal role in regulating numerous physiological processes by synthesizing and releasing thyroid hormones, namely thyroxine (T4) and triiodothyronine (T3).

Thyroid disease also encompasses other conditions such as thyroiditis, an inflammatory condition of the thyroid gland; thyroid nodules, which are abnormal growths or lumps on the thyroid gland; goiter, a condition marked by an enlarged thyroid gland; and thyroid cancer.

The proper functioning of the thyroid is integral to the body's overall well-being. Anomalies in thyroid function can have far-reaching effects on various bodily functions, including metabolism, cognitive development, bone health, heart rate regulation, mood stability, and energy levels. Despite the challenges posed by thyroid diseases, they are typically manageable and often treatable with medication, offering a promising prognosis for those affected.

An enlargement of the thyroid gland is referred to as a goiter. The terminology associated with thyroid disorders is delineated in Box 1.

Box 1: Terminology in thyroid disorders

- Primary hyper-/hypothyroidism: Increased or decreased function of thyroid gland due to disease of thyroid itself and not due to increased or decreased levels of TRH or TSH.

- Secondary hyper-/hypothyroidism: Increased or decreased function of thyroid gland due to increased or decreased levels of TSH.

- Tertiary hypothyroidism: Decreased function of thyroid gland due to decreased function of hypothalamus.

- Subclinical thyroid disease: A condition with abnormality of thyroid hormone levels in blood but without specific clinical manifestations of thyroid disease and without any history of thyroid dysfunction or therapy.

- Subclinical hyperthyroidism: A condition with normal thyroid hormone levels but with low or undetectable TSH level.

- Subclinical hypothyroidism: A condition with normal thyroxine and triiodothyronine level along with mildly elevated TSH level.

Hyperthyroidism

Hyperthyroidism is a medical condition precipitated by the overproduction of thyroid hormone. The etiological factors contributing to hyperthyroidism encompass the following:

- Graves' disease, also known as Diffuse Toxic Goiter

- Toxic manifestations in Multinodular Goiter

- Toxicity associated with Adenoma

- Subacute Thyroiditis

- TSH-secreting Pituitary Adenoma, leading to Secondary Hyperthyroidism

- Trophoblastic tumors that secrete a TSH-like hormone, such as Choriocarcinoma and Hydatidiform Mole

- Factitious Hyperthyroidism

Clinical Characteristics

The clinical manifestations of hyperthyroidism encompass a range of symptoms including nervousness, anxiety, irritability, and insomnia, along with the presence of fine tremors. Despite maintaining a normal or even increased appetite, individuals may experience weight loss. Additional symptoms include intolerance to heat, increased perspiration, and dyspnea upon exertion. Reproductive issues such as amenorrhea and infertility may also be present. Cardiovascular symptoms can include palpitations, tachycardia, cardiac arrhythmias, and in elderly patients, heart failure. Musculoskeletal symptoms may manifest as muscle weakness, proximal myopathy, and osteoporosis, particularly in elderly individuals.

Graves' disease, a specific form of hyperthyroidism, is characterized by a triad of symptoms: hyperthyroidism itself, ophthalmopathy (manifesting as exophthalmos, lid retraction, lid lag, corneal ulceration, and impaired eye muscle function), and dermopathy, specifically pretibial myxoedema.

Box 2: Thyroid function tests in hyperthyroidism

- Thyrotoxicosis:
  - Serum TSH low or undetectable
  - Raised total T4 and free T4
- T3 toxicosis:
  - Serum TSH undetectable
  - Normal total T4 and free T4
  - Raised T3

Laboratory Features

In the majority of patients, there is an observed elevation in the levels of free serum T3 and T4. In instances of T3 thyrotoxicosis, which accounts for 5% of thyrotoxicosis cases, serum T4 levels remain within normal parameters while T3 levels are elevated. Serum TSH levels are either low or undetectable (less than 0.1 mU/L) as indicated in Box 2.

A condition known as subclinical hyperthyroidism is characterized by undetectable or low serum TSH in conjunction with normal T3 and T4 levels. This condition may present with subtle signs and symptoms of thyrotoxicosis, although this is not always the case. Subclinical hyperthyroidism carries associated risks, including atrial fibrillation, osteoporosis, and the potential progression to overt thyroid disease.

A comparative analysis of the features of primary and secondary hyperthyroidism is presented in Table 1.

| Parameter | Primary hyperthyroidism | Secondary hyperthyroidism |
|---|---|---|
| Serum TSH | Low | Normal or high |
| Serum free thyroxine | High | High |
| TSH receptor antibodies | May be positive | Negative |
| Causes | Graves’ disease, toxic multinodular goiter, toxic adenoma | Pituitary adenoma |

Evaluation of hyperthyroidism is presented in Flowchart 1.

- TSH, FT4
  - Low TSH, high FT4
    - Primary hyperthyroidism
      - TRAb and isotope thyroid scan
        - TRAb +ve, diffuse uptake: Graves' disease
        - TRAb -ve, nodular uptake: Toxic adenoma
        - TRAb -ve, irregular uptake: Toxic multinodular goiter
  - Low TSH, normal FT4
    - Measure FT3
      - Normal: Subclinical or mild hyperthyroidism, non-thyroidal illness, or drugs (dopamine, steroids, amiodarone)
      - High: T3 thyrotoxicosis
  - High TSH, high FT4
    - TSH-secreting pituitary adenoma (secondary hyperthyroidism) or thyroid hormone resistance
      - TRH test
        - Increased response: Resistance to thyroid hormone
        - No response: Pituitary adenoma
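The primary hyperthyroidism branch of the flowchart (low TSH, high FT4, then TRAb and isotope scan) can be sketched as a short function. The name `primary_hyperthyroidism_cause` and the pattern labels "diffuse", "nodular" and "irregular" are assumptions chosen to mirror the flowchart wording. Combinations the flowchart does not show are reported as such.

```python
def primary_hyperthyroidism_cause(trab_positive: bool, uptake_pattern: str) -> str:
    """Map TRAb status and scan uptake pattern to the flowchart's end points."""
    pattern = uptake_pattern.strip().lower()
    if trab_positive and pattern == "diffuse":
        return "Graves' disease"
    if not trab_positive and pattern == "nodular":
        return "Toxic adenoma"
    if not trab_positive and pattern == "irregular":
        return "Toxic multinodular goiter"
    # The flowchart lists only the three combinations above.
    return "Combination not shown in the flowchart"
```

Returning an explicit "not shown" value for unlisted combinations keeps the sketch faithful to the flowchart instead of extrapolating beyond it.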

Hypothyroidism

Hypothyroidism is a condition caused by deficiency of thyroid hormones. Causes of hypothyroidism are listed below.

- Primary hypothyroidism (Increased TSH)

- Iodine deficiency

- Hashimoto’s thyroiditis

- Exogenous goitrogens

- Iatrogenic: surgery, drugs, radiation

- Secondary hypothyroidism (Low TSH): Diseases of pituitary

- Tertiary hypothyroidism (Low TSH, Low TRH): Diseases of hypothalamus

Primary hypothyroidism is characterized by an insufficiency in thyroid hormone production, which is not attributable to disorders of the hypothalamus or pituitary gland. Secondary hypothyroidism, on the other hand, arises from a deficiency in the secretion of Thyroid Stimulating Hormone (TSH) from the pituitary gland. A deficiency or loss of secretion of thyrotropin-releasing hormone from the hypothalamus results in what is known as tertiary hypothyroidism. Both secondary and tertiary hypothyroidism are significantly less common than primary hypothyroidism. Plasma TSH levels are elevated in primary hypothyroidism and reduced in secondary and tertiary hypothyroidism. The distinctions between primary and secondary hypothyroidism are delineated in Table 2.

| Parameter | Primary hypothyroidism | Secondary hypothyroidism |
|---|---|---|
| Cause | Hashimoto’s thyroiditis | Pituitary disease |
| Serum TSH | High | Low |
| Thyrotropin releasing hormone stimulation test | Exaggerated response | No response |
| Antimicrosomal antibodies | Present | Absent |

Box 3: Thyroid function tests in hypothyroidism

- Primary hypothyroidism
  - Serum TSH: Increased (proportional to degree of hypofunction)
  - Free T4: Decreased
  - TRH stimulation test: Exaggerated response
- Secondary hypothyroidism
  - Serum TSH: Decreased
  - Free T4: Decreased
  - TRH stimulation test: Absent response
- Tertiary hypothyroidism
  - Serum TSH: Decreased
  - Free T4: Decreased
  - TRH stimulation test: Delayed response

The clinical manifestations of primary hypothyroidism include lethargy, mild depression, menstrual irregularities, weight gain, intolerance to cold, dry skin, myopathy, constipation, and a firm and lobulated thyroid gland, a characteristic feature of Hashimoto’s thyroiditis.

In severe instances, a condition known as myxoedema coma may occur. This advanced stage is marked by stupor, hypoventilation, and hypothermia.

Laboratory Indicators

The laboratory indicators associated with hypothyroidism are detailed in Box 3.

A condition known as subclinical hypothyroidism is characterized by normal serum thyroxine (T4 and FT4) levels in conjunction with a moderately elevated TSH level (greater than 10 mU/L). This condition is associated with adverse obstetrical outcomes, impaired cognitive development in children, and an increased risk of hypercholesterolemia and progression to overt hypothyroidism.

The evaluation process for hypothyroidism is depicted in Flowchart 2.

- TSH, FT4
  - High TSH, low FT4
    - Primary hypothyroidism
      - Thyroid microsomal antibody
        - Increased: Hashimoto's thyroiditis
        - Normal: Congenital T4 synthesis deficiency or iodine deficiency
  - High TSH, normal FT4
    - Subclinical hypothyroidism
  - Low TSH, low FT4
    - Secondary or tertiary hypothyroidism
      - TRH stimulation test
        - Little or no TSH response: Secondary (pituitary) hypothyroidism
        - Delayed TSH response: Tertiary (hypothalamic) hypothyroidism

Female Infertility: Causes and Investigations

Female infertility is a condition characterized by the inability to conceive after 12 months of regular unprotected intercourse, or after 6 months if the woman is over 35. It affects millions globally, impacting individuals, families, and communities. Infertility can be attributed to female factors, male factors, or a combination of both in about two-thirds of cases, with the remaining cases having unknown causes. The primary symptom is the inability to become pregnant. Factors contributing to female infertility include abnormalities in the ovaries, uterus, fallopian tubes, and endocrine system. A menstrual cycle that’s too long (over 35 days), too short (less than 21 days), irregular, or absent may indicate a lack of ovulation, a common cause of infertility. Successful pregnancy requires each step of the human reproduction process to function correctly.

Female Reproductive System

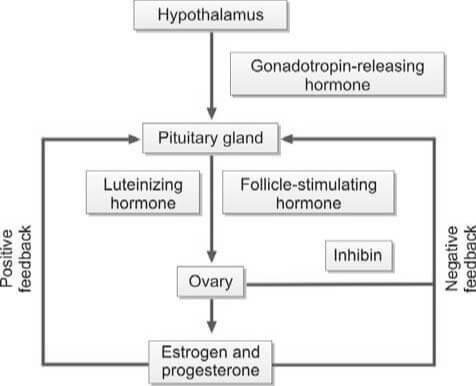

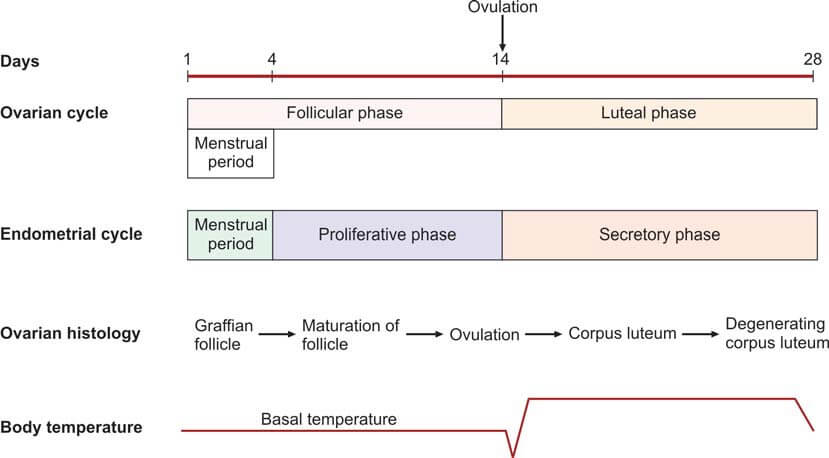

The ovaries serve as the production sites for female gametes, also known as ova, through a process called oogenesis. These ova are cyclically released at regular intervals through ovulation. Each ovary houses a multitude of follicles, each containing ova at different developmental stages.

During each menstrual cycle, up to 20 primordial follicles are activated for maturation. However, only one follicle reaches full maturity. This dominant follicle then ruptures to release the secondary oocyte from the ovary. The maturation of the follicle is stimulated by the follicle-stimulating hormone (FSH), which is secreted by the anterior pituitary gland.

As the follicle matures, it secretes estrogen, leading to the proliferation of the endometrium of the uterus, known as the proliferative phase. The follicular cells also secrete inhibin, which regulates the release of FSH by the anterior pituitary. A decrease in FSH levels triggers the secretion of luteinizing hormone (LH) by the anterior pituitary, known as the LH surge. This surge causes the follicle to rupture, expelling the ovum into the peritoneal cavity near the fimbrial end of the fallopian tube.

The fallopian tubes serve as conduits for the ova, transporting them from the ovaries to the uterus. The fertilization of the ovum by the sperm typically occurs within the fallopian tube.

The ovum is composed of the secondary oocyte, the zona pellucida, and the corona radiata. When the follicle in the ovary ruptures, it collapses and fills with a blood clot, forming the corpus luteum. Luteinizing hormone (LH) transforms granulosa cells in the follicle into lutein cells, which begin to secrete progesterone.

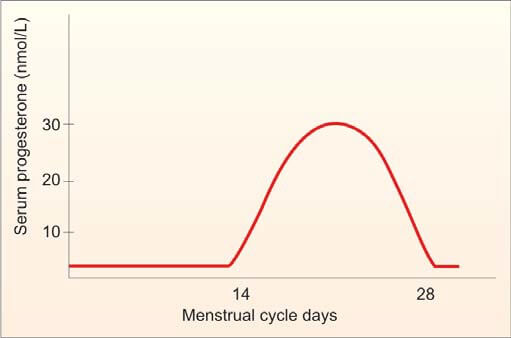

Progesterone stimulates secretion from the glands of the endometrium that was previously primed by estrogen; this is the secretory phase. As progesterone levels rise, LH production from the anterior pituitary is inhibited. Without LH, the corpus luteum regresses and becomes the non-functional corpus albicans. Following the regression of the corpus luteum, the production of estrogen and progesterone ceases, and the endometrium collapses, initiating menstruation.

If the ovum is fertilized and implants in the uterine wall, the developing placenta secretes human chorionic gonadotropin (hCG) into the maternal circulation. hCG maintains the corpus luteum for the secretion of estrogen and progesterone until the 12th week of pregnancy. After the 12th week, the corpus luteum regresses to the corpus albicans, and the placenta takes over the synthesis of estrogen and progesterone until childbirth.

The average duration of a normal menstrual cycle is 28 days, with ovulation typically occurring around the 14th day of the cycle. The interval between ovulation and menstruation, known as the luteal phase, is fairly constant at 14 days.

Factors Contributing to Female Infertility

The following are the primary causes of female infertility:

- Dysfunction of the Hypothalamic-Pituitary Axis:
  - Hypothalamic Factors:
    - Overexertion through exercise
    - High levels of stress
    - Underweight conditions
    - Kallmann syndrome
    - Unexplained causes (Idiopathic)
  - Pituitary Factors:
    - Hyperprolactinemia
    - Hypopituitarism (manifestations include Sheehan's syndrome, Simmonds' disease)
    - Craniopharyngioma
    - Exposure to cerebral irradiation
- Ovarian Dysfunction:
  - Polycystic Ovarian Syndrome (Stein-Leventhal syndrome)
  - Luteinized Unruptured Follicle
  - Turner's syndrome
  - Exposure to radiation or chemotherapy
  - Surgical removal of ovaries
  - Unexplained causes (Idiopathic)
- Impairment of the Reproductive Tract:
  - Fallopian Tubes:
    - Infections such as Tuberculosis, Gonorrhea, and Chlamydia
    - History of surgical procedures (e.g., laparotomy)
    - Tubectomy
    - Congenital conditions like hypoplasia or non-canalization
    - Endometriosis
  - Uterus:
    - Uterine malformations
    - Asherman's syndrome
    - Tuberculous endometritis
    - Fibroids
  - Cervix: Presence of sperm antibodies
  - Vagina: Septum
- Sexual Dysfunction: Dyspareunia

Investigations

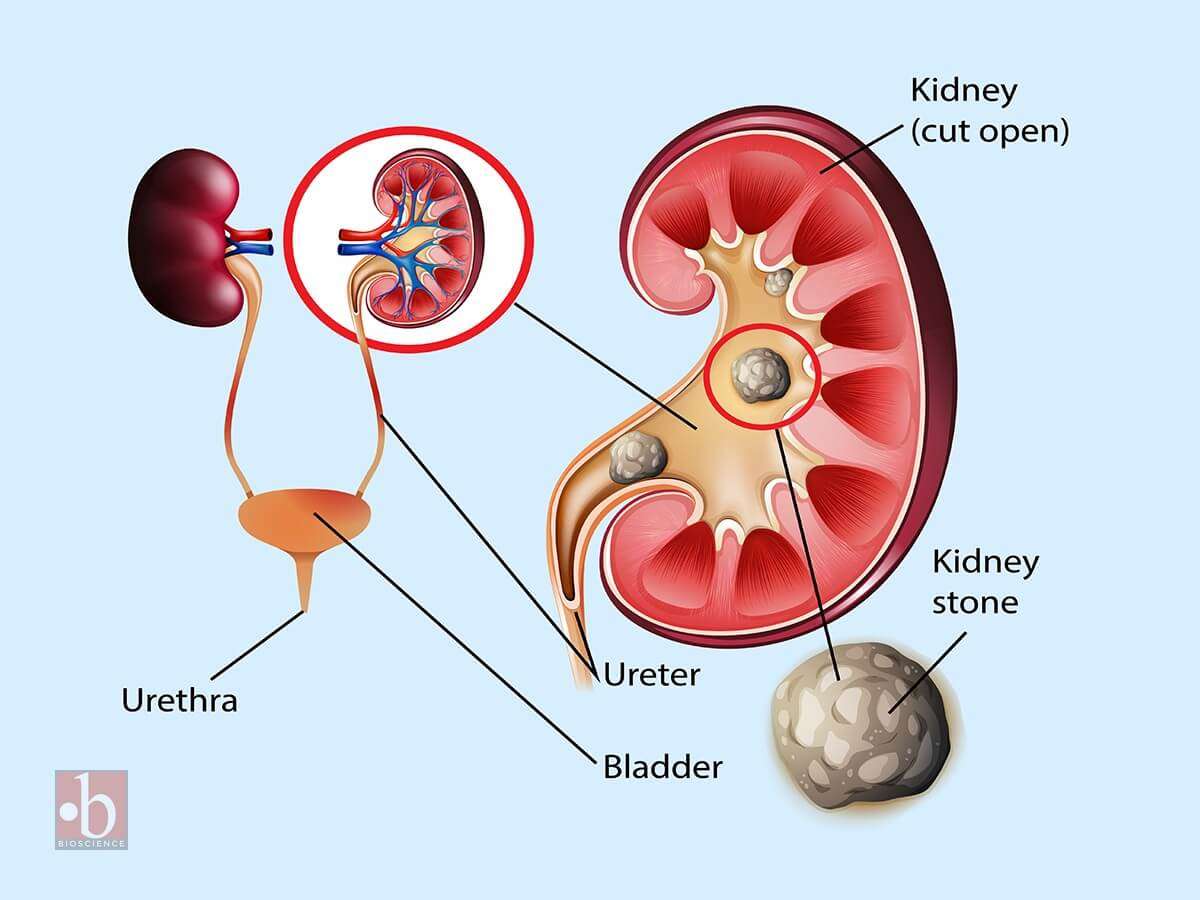

Evaluation of female infertility is shown in Flowchart 2.

Ovulation Testing Methods

Anovulation is the most prevalent cause of female infertility.

- Indicators of Ovulatory Cycles: Regular menstrual cycles, mastalgia, and the direct visualization of the corpus luteum through laparoscopy suggest ovulatory cycles. Anovulatory cycles are clinically manifested by amenorrhea, oligomenorrhea, or irregular menstruation. However, anovulation may still occur in seemingly regular cycles.

- Endometrial Biopsy: This procedure is performed during the premenstrual period (21st-23rd day of the cycle). The presence of a secretory endometrium during the latter half of the cycle serves as evidence of ovulation.

- Ultrasonography (USG): Serial ultrasonography begins from the 10th day of the cycle, measuring the size of the dominant follicle. A size greater than 18 mm suggests imminent ovulation. The collapse of the follicle and the presence of a few milliliters of fluid in the pouch of Douglas indicate ovulation. USG is also useful for treatment purposes (i.e., timing of coitus or intrauterine insemination) and diagnosing a luteinized unruptured follicle (absence of dominant follicle collapse). Transvaginal USG is more sensitive than abdominal USG.

- Basal Body Temperature (BBT): The patient measures her oral temperature at the same time every morning before getting up. A drop of about 0.5°F at the time of ovulation is observed. During the second (progestational) half of the cycle, the temperature slightly rises above the preovulatory level (increase of 0.5° to 1°F). This rise is due to the slight pyrogenic action of progesterone and thus serves as presumptive evidence of a functional corpus luteum.

- Cervical Mucus Study:

- Fern Test: During the estrogenic phase, a characteristic fern pattern appears when cervical mucus is spread on a glass slide. This ferning disappears after the 21st day of the cycle. If previously observed, its disappearance is presumptive evidence of corpus luteum activity.

- Spinnbarkeit Test: Cervical mucus is elastic and can stretch up to a distance of over 10 cm. This phenomenon, known as Spinnbarkeit or the thread test, indicates estrogen activity. During the secretory phase, the viscosity of the cervical mucus increases, and it fractures when stretched. This change in cervical mucus is evidence of ovulation.

- Vaginal Cytology: The Karyopyknotic Index (KI) is high during the estrogenic phase and decreases in the secretory phase. This index is the percentage of superficial squamous cells with pyknotic nuclei among all mature squamous cells in a lateral vaginal wall smear. Typically, a minimum of 300 cells are evaluated. The peak KI usually corresponds with the time of ovulation and may reach 50 to 85%.

- Estimation of Progesterone in Mid-Luteal Phase (day 21 or 7 days before expected menstruation): A progesterone level greater than 10 nmol/L is a reliable indicator of ovulation if cycles are regular. An improperly timed sample is a common cause of abnormal results.
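The timing arithmetic behind these tests can be sketched in a few lines. The sketch relies on figures stated in the text: a luteal phase of about 14 days, a mid-luteal sample taken 7 days before expected menstruation (day 21 of a 28-day cycle), and a progesterone level above 10 nmol/L as an indicator of ovulation. The function names are hypothetical, and the calculation assumes a regular cycle of known length.

```python
LUTEAL_PHASE_DAYS = 14             # interval between ovulation and menstruation
PROGESTERONE_CUTOFF_NMOL_L = 10.0  # level quoted in the text


def expected_ovulation_day(cycle_length_days: int) -> int:
    """Cycle day on which ovulation is expected for a regular cycle."""
    return cycle_length_days - LUTEAL_PHASE_DAYS


def mid_luteal_sampling_day(cycle_length_days: int) -> int:
    """Cycle day for the mid-luteal progesterone sample.

    Follows the text's convention that day 21 of a 28-day cycle is
    '7 days before expected menstruation'.
    """
    return cycle_length_days - 7


def progesterone_suggests_ovulation(level_nmol_l: float) -> bool:
    """True when the mid-luteal level exceeds the quoted cutoff."""
    return level_nmol_l > PROGESTERONE_CUTOFF_NMOL_L
```

For a regular 35-day cycle this gives ovulation around day 21 and sampling on day 28. Sampling such a patient on day 21 would be one instance of the improperly timed sample that the text warns about.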

Diagnostic Procedures for Anovulation

The following tests are performed to identify the cause of anovulation:

- Measurement of LH, FSH, and Estradiol Levels (Days 2 to 6): In cases of hypogonadotropic hypogonadism, which is a result of hypothalamic or pituitary failure, all these values are typically low.

- Measurement of TSH, Prolactin, and Testosterone Levels (If Cycles are Irregular or Absent):

- Increased TSH Levels: This is indicative of hypothyroidism.

- Increased Prolactin Levels: This could suggest the presence of a pituitary adenoma.

- Increased Testosterone Levels: This could be a sign of Polycystic Ovarian Disease (PCOD) or Congenital Adrenal Hyperplasia. To differentiate between PCOD and Congenital Adrenal Hyperplasia, an ultrasound and estimation of dehydroepiandrosterone (DHEA) are performed.

- Transvaginal Ultrasonography: This procedure is conducted to detect the presence of PCOD.
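The mapping from hormone findings to possible causes described above can be summarised as a small helper. This is a sketch for illustration only. The function `anovulation_leads` and its boolean flags are hypothetical, and each flag is assumed to have been set by comparing the result with the laboratory's own reference range.

```python
from typing import List


def anovulation_leads(*, lh_low: bool = False, fsh_low: bool = False,
                      estradiol_low: bool = False, tsh_high: bool = False,
                      prolactin_high: bool = False,
                      testosterone_high: bool = False) -> List[str]:
    """List the possible causes the text associates with each finding."""
    leads: List[str] = []
    # All three low together points to hypothalamic or pituitary failure.
    if lh_low and fsh_low and estradiol_low:
        leads.append("hypogonadotropic hypogonadism")
    if tsh_high:
        leads.append("hypothyroidism")
    if prolactin_high:
        leads.append("possible pituitary adenoma")
    if testosterone_high:
        leads.append("PCOD or congenital adrenal hyperplasia "
                     "(differentiate with ultrasound and DHEA)")
    return leads
```

The helper returns a list because the findings are not mutually exclusive; an empty list means none of the patterns described in the text was present.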

Evaluating Tubal and Uterine Health

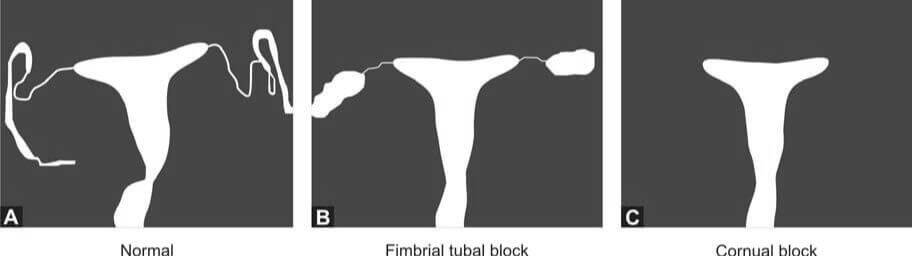

The following investigations are conducted to assess the status of the fallopian tubes and uterus:

- Infectious Disease Testing: This includes an endometrial biopsy for tuberculosis and a test for Chlamydial IgG antibodies to determine the tubal factor in infertility.

- Hysterosalpingography (HSG): HSG is a radiological contrast study used to examine the shape of the uterine cavity and to detect any blockage in the fallopian tubes. A catheter is inserted into the cervical canal, and a radiocontrast dye is injected into the uterine cavity. Real-time X-ray imaging is then performed to observe the flow of the dye into the uterine cavity and tubes, and its spillage into the peritoneal cavity.

- Hysterosalpingo-Contrast Sonography: In this procedure, a catheter is introduced into the cervical canal, and an echocontrast fluid is injected into the uterine cavity. The shape of the uterine cavity, the filling of the fallopian tubes, and the spillage of the contrast fluid are observed. Additionally, an ultrasound scan of the pelvis provides information about any existing fibroids or polycystic ovarian disease.

- Laparoscopy and Dye Hydrotubation Test with Hysteroscopy: This test involves inserting a cannula into the cervix and introducing methylene blue dye into the uterine cavity. If the tubes are patent, the dye will be observed spilling from the ends of both tubes. This technique also allows for the visualization of pelvic organs, endometriosis, and pelvic adhesions. If necessary, endometriosis and tubal blockage can be treated during the procedure.

Please note that potential pregnancy and active pelvic or vaginal infection are contraindications to tubal patency tests.

Male Infertility: Causes and Investigations

Male infertility is a health condition that impedes a man's ability to initiate a pregnancy with his female partner. This condition is often associated with complications in sperm production or the creation of healthy sperm. It can also be linked to functional issues that affect the sperm's capacity to fertilize an egg. The causes of male infertility are multifaceted and can include hormonal imbalances, genetic disorders like cystic fibrosis, problems with the testes, and blockages in the genital tract. Environmental factors, lifestyle choices such as smoking or excessive alcohol consumption, infections, certain medications, and sexual dysfunction can also contribute to male infertility. A comprehensive medical evaluation by a specialist, such as a urologist or reproductive endocrinologist, is typically required for accurate diagnosis and effective treatment of this condition.

Male Reproductive System

The male reproductive system is a complex network of organs and structures that work in harmony to ensure the production, maturation, and delivery of spermatozoa.

- Testes: These paired organs, nestled within the scrotal sac, serve as the epicenter of male reproductive function. Their dual role involves both sperm production (spermatogenesis) and the secretion of testosterone—the quintessential male hormone. The testes are home to specialized cells called Leydig cells, which diligently manufacture testosterone.

- Ductal System:

- Epididymis: A coiled tube adjacent to each testis, the epididymis plays a pivotal role in sperm maturation. It provides a nurturing environment for spermatozoa, allowing them to gain motility and acquire the ability to fertilize an egg.

- Vasa Deferentia: These paired ducts extend from the epididymis and serve as conduits for sperm transport. They propel sperm toward their ultimate destination—the ejaculatory ducts.

- Ejaculatory Ducts: These tubes, formed by the union of the vasa deferentia and seminal vesicles, facilitate the release of sperm during ejaculation. Their intricate coordination ensures the efficient delivery of spermatozoa.

- Accessory Glands:

- Seminal Vesicles: Paired and strategically positioned near the base of the bladder, the seminal vesicles contribute significantly to seminal fluid composition. Their secretions provide nourishment and energy to sperm, enhancing their chances of survival.

- Prostate Gland: Singular and walnut-sized, the prostate gland adds its own unique blend to the seminal fluid. Its contributions include enzymes and substances that aid in liquefying semen post-ejaculation.

- Bulbourethral Glands of Cowper: These small glands, often overlooked, secrete a lubricating mucus. This mucus serves as a prelude to ejaculation, ensuring smooth passage for sperm through the urethra.

- Penis: The external organ of copulation, the penis plays a dual role: it houses the urethra (through which urine and semen exit) and serves as the conduit for sexual intercourse.

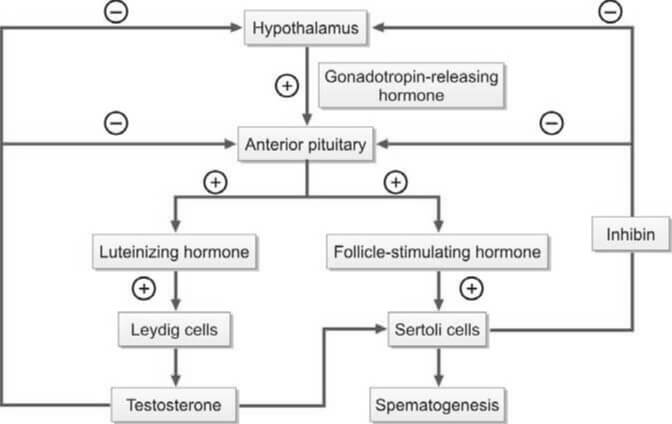

- Hormonal Regulation:

- Gonadotropin-Releasing Hormone (GnRH): Produced by the hypothalamus, GnRH orchestrates the delicate dance of hormonal regulation. It stimulates the anterior pituitary gland to release two crucial gonadotropins: luteinizing hormone (LH) and follicle-stimulating hormone (FSH).

- Luteinizing Hormone (LH): LH's primary target is the Leydig cells within the testes. Upon activation, these cells ramp up testosterone production, essential for spermatogenesis and the development of secondary sexual characteristics.

- Testosterone: This powerhouse hormone not only fuels sperm production but also influences muscle mass, bone density, and libido. Intriguingly, testosterone undergoes conversion to dihydrotestosterone (DHT) within cells, enabling it to exert its androgenic effects.

- Follicle-Stimulating Hormone (FSH): Acting on Sertoli cells within the seminiferous tubules, FSH fine-tunes the maturation process of spermatozoa. These same Sertoli cells also produce inhibin, which, in a neat feedback loop, regulates FSH secretion.

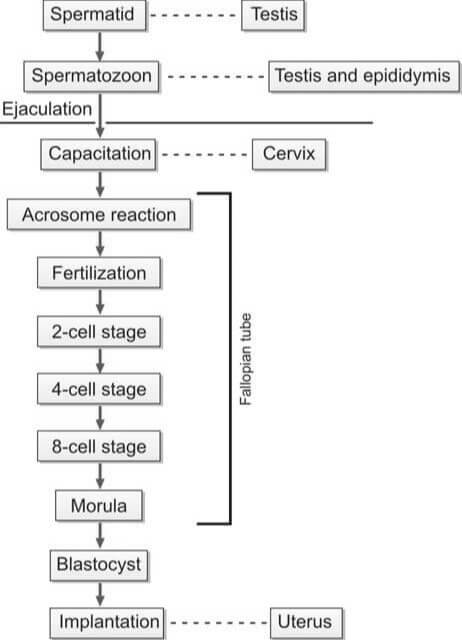

During the act of copulation, seminal fluid is introduced into the female's vagina. This semen undergoes a process known as liquefaction within a span of 20 to 30 minutes, facilitated by the proteolytic enzymes present in the prostatic fluid. For successful in vivo fertilization, the spermatozoa must experience capacitation and the acrosome reaction.

Capacitation is a term that describes the physiological transformations that sperm undergo as they traverse the cervix of the female reproductive tract. Through this process, the sperm gain the ability to (i) initiate the acrosome reaction, (ii) bind to the zona pellucida, and (iii) exhibit hypermotility. The sperm then journey through the cervix and uterus, eventually reaching the fallopian tube.

The binding of the sperm to the zona pellucida triggers the acrosomal reaction, which involves the breakdown of the outer plasma membrane by acrosomal enzymes and its fusion with the outer acrosomal membrane, resulting in the loss of the acrosome. This step is crucial for the fusion of the sperm and oocyte membranes.

Following the acrosomal reaction and the binding of sperm and ovum surface proteins, the sperm penetrates the zona pellucida of the ovum. After a sperm has penetrated, the zona pellucida hardens, preventing further penetration by additional sperm. The fertilization of the egg by the sperm typically occurs in the ampullary section of the fallopian tube.

Factors Contributing to Male Infertility

Male infertility is a complex issue that arises from a multitude of factors that can impact sperm production, motility, or function. It’s often rooted in hormonal imbalances, genetic disorders such as Klinefelter syndrome, and testicular complications including trauma or cancer. Environmental influences, including exposure to harmful toxins, can also play a part. Lifestyle choices, such as smoking, excessive alcohol consumption, and obesity, significantly contribute to male infertility. Furthermore, infections, certain medications, and sexual dysfunction can also be contributing factors. To accurately identify the specific cause, a comprehensive medical evaluation by a urologist or reproductive specialist is typically necessary. Understanding the root cause is the first step towards effective treatment and management of male infertility.

The following are the various factors that can contribute to male infertility:

- Idiopathic: This refers to cases where the cause of infertility remains unknown.

- Hypothalamic-Pituitary Dysfunction (Hypogonadotropic Hypogonadism): This condition involves a disruption in the communication between the hypothalamus and pituitary gland, leading to decreased production of sex hormones.

- Testicular Dysfunction: This category includes a variety of factors:

- Exposure to radiation, cytotoxic drugs, antihypertensives, and antidepressants.

- General factors such as stress, emotional factors, substance abuse (including marijuana, anabolic steroids, and cocaine), alcoholism, heavy smoking, and undernutrition.

- Post-puberty mumps orchitis.

- Varicocele, which is the dilation of the pampiniform plexus of scrotal veins.

- Cryptorchidism, or undescended testes.

- Endocrine disorders such as diabetes mellitus and thyroid dysfunction.

- Genetic disorders including Klinefelter’s syndrome, microdeletions in the Y chromosome, autosomal Robertsonian translocation, immotile cilia syndrome (Kartagener’s syndrome), cystic fibrosis, and defects in the androgen receptor gene.

- Dysfunction of Passages and Accessory Sex Glands: This includes:

- Infections of the epididymis caused by tuberculosis, gonorrhea, or Chlamydia.

- Congenital bilateral absence of the vasa deferentia (often associated with cystic fibrosis) or vasectomy.

- Prostatitis, or inflammation of the prostate gland.

- Dysfunction of Sexual Act: This encompasses:

- Impotence or erectile dysfunction.

- Ejaculation defects such as retrograde ejaculation (where semen is pumped backwards into the bladder), premature ejaculation, or absence of ejaculation.

- Hypospadias, a condition where the opening of the urethra is on the underside of the penis rather than at the tip.

Examination Procedures for Male Infertility

The following are the various procedures involved in the investigation of male infertility:

- Patient History: This encompasses the individual's lifestyle (including heavy smoking and alcohol consumption), sexual habits, instances of erectile dysfunction, ejaculation patterns, history of sexually transmitted diseases, any surgeries performed in the genital area, medication usage, and any systemic illnesses.

- Physical Examination: This involves a thorough examination of the reproductive system, including the size of the testicles, presence of undescended testes, hypospadias, and any scrotal abnormalities such as varicocele. It also includes an assessment of body and facial hair. Varicocele, which can occur bilaterally, is the most common surgically correctable abnormality causing male infertility.

- Semen Analysis: Please refer to the article on Semen Analysis for more information. The evaluation of azoospermia and of low semen volume is depicted in Flowcharts 3 and 4, respectively.

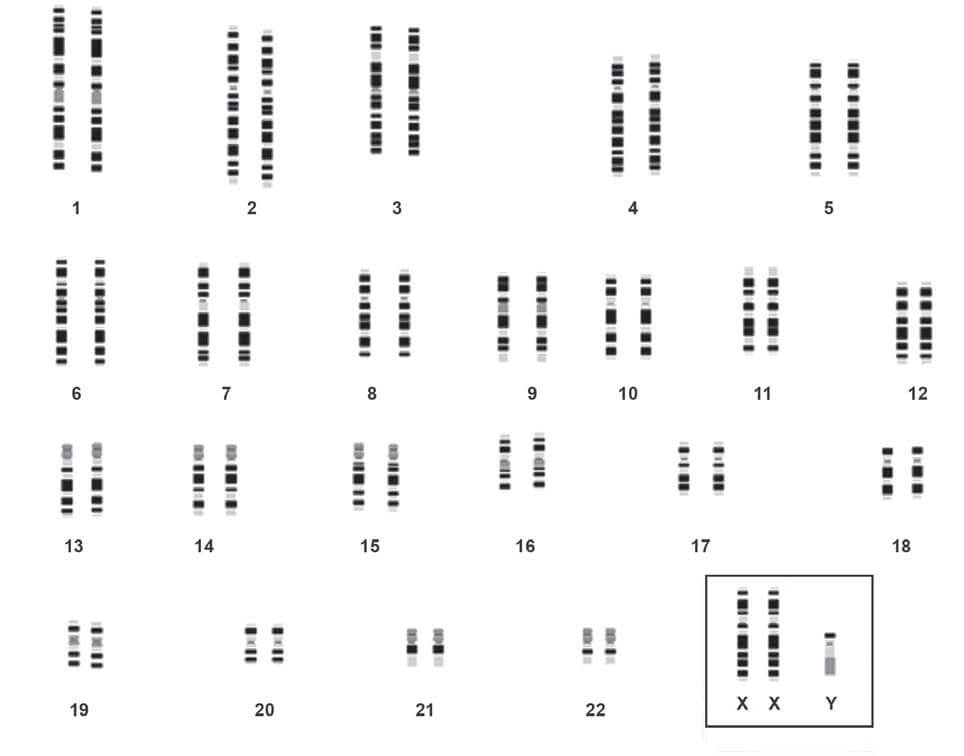

- Chromosomal Analysis: This test can identify conditions such as Klinefelter’s syndrome (e.g., XXY karyotype), deletions in the Y chromosome, and autosomal Robertsonian translocations. If there is a bilateral congenital absence of the vas deferens, screening for the cystic fibrosis carrier state is necessary.

- Hormonal Studies: These involve the measurement of FSH, LH, and testosterone levels to detect any hormonal abnormalities that could lead to testicular failure.

- Testicular Biopsy: A testicular biopsy is recommended when it is not clear whether azoospermia is obstructive or non-obstructive (i.e., normal FSH levels and normal testicular volume).

| Follicle stimulating hormone | Luteinizing hormone | Testosterone | Interpretation |
|---|---|---|---|
| Low | Low | Low | Hypogonadotropic hypogonadism (hypothalamic or pituitary disorder) |
| High | High | Low | Hypergonadotropic hypogonadism (testicular disorder) |
| Normal | Normal | Normal | Obstruction of passages, dysfunction of accessory glands |
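The pattern matching in the hormone table above can be sketched in Python. This is only an illustration: the function name `interpret_hormone_profile` and the labels "low", "normal", and "high" are assumptions made for the example. The table gives no numeric cut-offs, so each level is assumed to have been classified already against the laboratory's own reference range.

```python
def interpret_hormone_profile(fsh: str, lh: str, testosterone: str) -> str:
    """Map an (FSH, LH, testosterone) pattern to the table's interpretation.

    Each argument is one of "low", "normal", or "high" (hypothetical labels).
    """
    patterns = {
        ("low", "low", "low"):
            "Hypogonadotropic hypogonadism (hypothalamic or pituitary disorder)",
        ("high", "high", "low"):
            "Hypergonadotropic hypogonadism (testicular disorder)",
        ("normal", "normal", "normal"):
            "Obstruction of passages, dysfunction of accessory glands",
    }
    key = (fsh.lower(), lh.lower(), testosterone.lower())
    # Any combination not listed in the table is reported as such, not guessed.
    return patterns.get(key, "Pattern not covered by the table")


print(interpret_hormone_profile("High", "High", "Low"))
```

Patterns outside the three rows of the table are deliberately left uninterpreted, since the source does not describe them.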

Flowchart 3: Evaluation of azoospermia

- Azoospermia (on two separate occasions)
  - Normal-sized testes
    - Obstructive azoospermia
      - Epididymal obstruction by chlamydia or gonorrhea
      - Vasectomy
      - Congenital bilateral absence of vas
        - Genetic testing for cystic fibrosis gene
  - Small or soft testes
    - Non-obstructive azoospermia
      - Hormonal analysis
        - FSH, LH, testosterone levels low
          - Hypogonadotropic hypogonadism
        - FSH, LH high; testosterone level normal or low
          - Primary testicular failure
            - Genetic studies for Klinefelter's syndrome

Flowchart 4: Evaluation of low semen volume

- Semen volume <1 ml
  - Examination of post-ejaculatory urine
    - Positive for sperms
      - Retrograde ejaculation
    - Negative for sperms
      - Transrectal ultrasonography for seminal vesicle abnormality and ejaculatory duct obstruction
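As an illustration only, the two evaluation pathways above (azoospermia and low semen volume) can be written as simple decision functions. The function names, parameters, and returned strings are hypothetical and chosen for this sketch; they restate the flowchart branches and are not a clinical decision tool.

```python
from typing import Optional


def evaluate_azoospermia(normal_sized_testes: bool,
                         gonadotropins: Optional[str] = None) -> str:
    """Follow the azoospermia flowchart.

    gonadotropins: "low" or "high" for FSH/LH (hypothetical labels);
    None means hormonal analysis has not been done yet.
    """
    if normal_sized_testes:
        return ("Obstructive azoospermia: consider epididymal obstruction, "
                "vasectomy, or congenital bilateral absence of vas")
    # Small or soft testes: non-obstructive azoospermia, classified by hormones.
    if gonadotropins == "low":
        return "Non-obstructive azoospermia: hypogonadotropic hypogonadism"
    if gonadotropins == "high":
        return ("Non-obstructive azoospermia: primary testicular failure "
                "(genetic studies for Klinefelter's syndrome)")
    return "Non-obstructive azoospermia: hormonal analysis needed"


def evaluate_low_semen_volume(sperm_in_post_ejaculatory_urine: bool) -> str:
    """Follow the low semen volume (<1 ml) flowchart."""
    if sperm_in_post_ejaculatory_urine:
        return "Retrograde ejaculation"
    return ("Transrectal ultrasonography for seminal vesicle abnormality "
            "and ejaculatory duct obstruction")


print(evaluate_azoospermia(False, "high"))
print(evaluate_low_semen_volume(True))
```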

The following are the standard preliminary examinations typically conducted to determine the cause of infertility:

- Semen Analysis: This is a comprehensive examination of a man's semen and sperm to assess fertility.

- Blood Glucose: This test measures the level of glucose (sugar) in the blood and can indicate potential health issues.

- Endocrine Tests: These tests measure the levels of specific hormones in the blood, such as Follicle Stimulating Hormone (FSH), Luteinizing Hormone (LH), and testosterone, which play crucial roles in reproduction.

LABORATORY TESTS FOR GASTRIC ANALYSIS

Hollander’s test (Insulin hypoglycemia test):

In the past, this test was used to confirm the completeness of vagotomy (done for duodenal ulcer). Hypoglycemia is a potent stimulus for gastric acid secretion and is mediated by the vagus nerve. This response is abolished by vagotomy.

In this test, after determining BAO, insulin is administered intravenously (0.15-0.2 units/kg) and acid output is estimated every 15 minutes for 2 hours (8 post-stimulation samples). Vagotomy is considered complete if, after insulin-induced hypoglycemia (blood glucose < 45 mg/dl), no acid output is observed within 45 minutes.

The test gives reliable results only if blood glucose level falls below 50 mg/dl at some time following insulin injection. It is best carried out after 3-6 months of vagotomy.

The test is no longer recommended because of the risk associated with hypoglycemia. Myocardial infarction, shock, and death have also been reported.

Fractional test meal:

In the past, test meals (e.g. oat meal gruel, alcohol) were administered orally to stimulate gastric secretion and determine MAO or PAO. Currently, parenteral pentagastrin is the gastric stimulant of choice.

Tubeless gastric analysis:

This is an indirect and rapid method for determining output of free hydrochloric acid in gastric juice. In this test, a cation-exchange resin tagged to a dye (azure A) is orally administered. In the stomach, the dye is displaced from the resin by the free hydrogen ions of the hydrochloric acid. The displaced azure A is absorbed in the small intestine, enters the bloodstream, and is excreted in urine. Urinary concentration of the dye is measured photometrically or by visual comparison with known color standards. The quantity of the dye excreted is proportional to the gastric acid output. However, if kidney or liver function is impaired, false results may be obtained. The test is no longer in use.

Spot check of gastric pH:

According to some investigators, spot determination of pH of fasting gastric juice (obtained by nasogastric intubation) can detect the presence of hypochlorhydria (if pH>5.0 in men or >7.0 in women).

Congo red test during esophagogastroduodenoscopy:

This test is done to determine the completeness of vagotomy. Congo red dye is sprayed into the stomach during esophagogastroduodenoscopy. Congo red is red at near-neutral pH and turns blue-black in acid, so a change from red to blue-black indicates the presence of functional parietal cells capable of producing acid.

REFERENCE RANGES

- Volume of gastric juice: 20-100 ml

- Appearance: Clear

- pH: 1.5 to 3.5

- Basal acid output: Up to 5 mEq/hour

- Peak acid output: 1 to 20 mEq/hour

- Ratio of basal acid output to peak acid output: <0.20 or < 20%

CONTRAINDICATIONS TO GASTRIC ANALYSIS

- Gastric intubation for gastric analysis is contraindicated in esophageal stricture or varices, active nasopharyngeal disease, diverticula, malignancy, recent history of severe gastric hemorrhage, hypertension, aortic aneurysm, cardiac arrhythmias, congestive cardiac failure, or non-cooperative patient.

- Pyloric stenosis: Obstruction of gastric outlet can elevate gastric acid output due to raised gastrin (following antral distension).

- Pentagastrin stimulation is contraindicated in cases with allergy to pentagastrin, and in recent severe gastric hemorrhage due to peptic ulcer disease.

Gastric analysis is now rarely performed for the following reasons:

- It is an invasive and cumbersome technique that is traumatic and unpleasant for the patient.

- Information obtained is not diagnostic in itself.

- Availability of better tests for diagnosis such as endoscopy and radiology (for suspected peptic ulcer or malignancy); serum gastrin estimation (for ZE syndrome); vitamin assays, Schilling test, and antiparietal cell antibodies (for pernicious anemia); and tests for Helicobacter pylori infection (in duodenal or gastric ulcer).

- Availability of better medical line of treatment that obviates need for surgery in many patients.

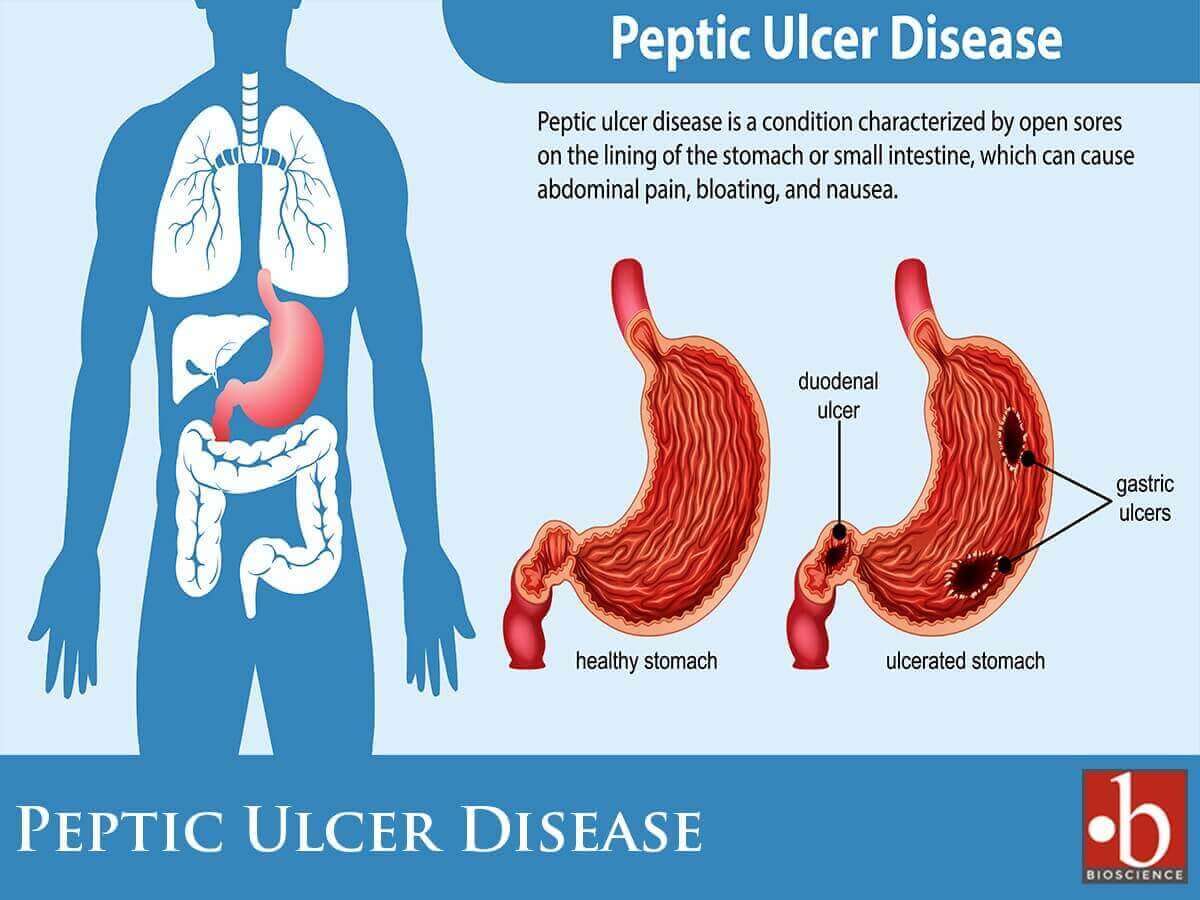

Indications for Gastric Analysis

Gastric analysis involves the assessment of the quantity of acid produced by the stomach through the analysis of aspirated gastric juice samples. The estimation of gastric acid output encompasses both the baseline (basal) and the maximum (peak) levels achieved after the stimulation of parietal cells. Originally introduced primarily for evaluating peptic ulcer disease and determining the necessity for surgical intervention, the test’s significance has diminished over time. This decline is attributed to the decreased prevalence of peptic ulcer disease and the widespread availability of safe and efficacious medical treatments, thereby diminishing the prominence of surgical interventions.

Gastric analysis, a diagnostic procedure that assesses the composition and activity of gastric juice, is employed in various clinical scenarios. Indications for gastric analysis include:

- To determine the cause of recurrent peptic ulcer disease:

- To detect Zollinger-Ellison (ZE) syndrome: Zollinger-Ellison (ZE) syndrome stands as a rare pathology characterized by the development of multiple mucosal ulcers in the stomach, duodenum, and upper jejunum, attributed to a pronounced hypersecretion of stomach acid. This excessive acid secretion finds its roots in a gastrin-producing tumor originating in the pancreas. The diagnostic journey for ZE syndrome often involves gastric analysis, aiming to identify significantly heightened basal and pentagastrin-stimulated gastric acid outputs. Additionally, a more nuanced and specific diagnostic approach leverages the measurement of serum gastrin levels, both in the fasting state and under secretin stimulation. This multifaceted diagnostic strategy not only aids in confirming ZE syndrome but also plays a crucial role in assessing the responsiveness to acid-suppressant therapies.

- To decide about completeness of vagotomy following surgery for peptic ulcer disease: Hollander's test (the insulin hypoglycemia test) was used for this purpose. Insulin-induced hypoglycemia stimulates gastric acid secretion through the vagus nerve, so acid output is measured before and after intravenous insulin; absence of an acid response indicates complete vagotomy. The test is no longer recommended because of the risks associated with hypoglycemia. See Hollander's test.

- To determine the cause of raised fasting serum gastrin level: Elevated levels of gastrin, known as hypergastrinemia, may manifest in various clinical conditions such as achlorhydria, Zollinger-Ellison syndrome, and antral G cell hyperplasia.

- To support the diagnosis of pernicious anemia (PA): Pernicious anemia stems from the impaired absorption of vitamin B12, resulting from the breakdown in intrinsic factor synthesis due to gastric mucosal atrophy. This condition is further marked by the absence of hydrochloric acid in the gastric juice, a state referred to as achlorhydria. In cases where facilities for vitamin assays and Schilling’s test are unavailable, gastric analysis becomes a valuable tool for demonstrating achlorhydria. It is crucial to note, however, that achlorhydria alone is insufficient for the definitive diagnosis of pernicious anemia.

- To distinguish between benign and malignant ulcer: Excessive acid secretion characterizes duodenal peptic ulcers, whereas gastric carcinoma is associated with a deficiency in acid production known as achlorhydria. However, anacidity is observed only in a limited number of cases involving advanced gastric cancer. Additionally, it’s worth noting that increased acid output is not universally present in all individuals with duodenal ulcers.

- To measure the amount of acid secreted in a patient with symptoms of peptic ulcer dyspepsia but normal X-ray findings: Excess acid secretion in such cases is indicative of duodenal ulcer. However, hypersecretion of acid does not always occur in duodenal ulcer.

- To decide the type of surgery to be performed in a patient with peptic ulcer: Elevated basal and peak acid outputs signify an augmentation in parietal cell mass, suggesting the necessity for gastrectomy. Conversely, an elevated basal acid output coupled with a normal peak output serves as an indicator for vagotomy.

Method of Gastric Analysis

To evaluate gastric acid secretion, the stomach's acid output is measured both in a fasting state and post the administration of a stimulating drug. The Basal Acid Output (BAO) represents the quantity of hydrochloric acid (HCl) secreted without external stimuli (visual, olfactory, or auditory). The Maximum Acid Output (MAO) quantifies the HCl secreted by the stomach when stimulated by pentagastrin, calculated from the initial four 15-minute samples post-stimulation. For assessing the greatest possible acid secretory capacity, the Peak Acid Output (PAO) is derived from the two highest consecutive 15-minute samples, preferred for its enhanced reproducibility. The acidity level is determined through titration methods.

Collection of Sample

All medications influencing gastric acid secretion, including antacids, anticholinergics, cholinergics, H2-receptor antagonists, antihistamines, tranquilizers, antidepressants, and carbonic anhydrase inhibitors, must be withheld for 24 hours before the examination. Proton pump inhibitors require discontinuation 5 days prior to the test. To ensure accurate results, patients should be in a relaxed state, devoid of any sensory stimulation sources.

No food or drink is allowed after midnight preceding the test. Gastric juice can be obtained through an oral or nasogastric tube, either during endoscopy or through aspiration.

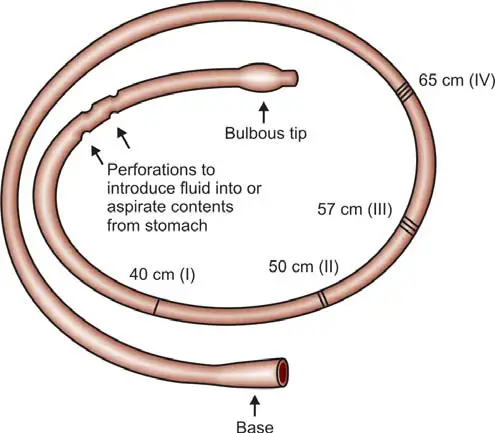

The commonly used oral or nasogastric tube (depicted in Figure 1) is a flexible, narrow-diameter tube with a weighted bulbous end, facilitating gastric juice entry through perforations. Its radiopaque feature allows precise positioning in the stomach's most dependent part under fluoroscopic or X-ray guidance. Lubricated for ease, the tube can be introduced via the mouth or nose while the patient is seated or reclined on the left side. Markings on the tube's outer surface correspond to distances from the teeth: 40 cm (tip to cardioesophageal junction), 50 cm (body of stomach), 57 cm (pyloric antrum), and 65 cm (duodenum). Tube placement can be verified by fluoroscopy or the ‘water recovery test’, where the recovery of over 90% of introduced water indicates proper placement. Typically, the tube is positioned in the antrum, and a syringe is attached for gastric juice aspiration.

For BAO Estimation: Samples are collected in the morning after a 12-hour overnight fast. Initial gastric secretion accumulated overnight is aspirated and discarded. Subsequently, gastric secretions are aspirated at 15-minute intervals for 1 hour, resulting in a total of 4 consecutive samples. All samples undergo centrifugation to remove particulate matter. Each 15-minute sample is analyzed for volume, pH, and acidity. The acid output of the four samples is totaled and expressed in milliequivalents per hour (mEq/hour) or mmol per hour.

After Gastric Juice Collection for BAO Determination: Following this, the patient receives a subcutaneous or intramuscular injection of pentagastrin (6 μg/kg of body weight). Immediately afterward, gastric secretions are aspirated at 15-minute intervals for 1 hour for the estimation of MAO or PAO. MAO is calculated from the first four 15-minute samples after stimulation, while PAO is derived from two consecutive 15-minute samples showing the highest acidity.

Titration

Box 1: Determination of basal acid output, maximum acid output, and peak acid output

- Basal acid output (BAO) = Total acid content in all four 15-minute basal samples (mEq/hour)

- Maximum acid output (MAO) = Total acid content in all four 15-minute post-pentagastrin samples (mEq/hour)

- Peak acid output (PAO) = Sum of two consecutive 15-minute post-pentagastrin samples showing highest acidity × 2 (mEq/hour)
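The calculations in Box 1 can be sketched in Python as follows. The function names and sample values are illustrative assumptions, not part of the source. Each input is the acid content (in mEq) of one 15-minute sample, and results are taken to be in mEq/hour, matching the reference ranges. For PAO, the sketch assumes that "two consecutive samples showing highest acidity" means the adjacent pair with the largest combined acid content.

```python
from typing import Sequence


def hourly_acid_output(samples_meq: Sequence[float]) -> float:
    """BAO or MAO: total acid content of four consecutive 15-minute samples."""
    if len(samples_meq) != 4:
        raise ValueError("expected four 15-minute samples")
    return sum(samples_meq)


def peak_acid_output(post_stimulation_meq: Sequence[float]) -> float:
    """PAO: highest sum of two consecutive 15-minute samples, multiplied by 2."""
    if len(post_stimulation_meq) < 2:
        raise ValueError("need at least two samples")
    best_pair = max(
        post_stimulation_meq[i] + post_stimulation_meq[i + 1]
        for i in range(len(post_stimulation_meq) - 1)
    )
    return 2 * best_pair


# Hypothetical sample values (mEq per 15-minute sample).
basal = [0.5, 0.75, 0.5, 0.25]
stimulated = [3.0, 5.5, 6.0, 4.5]

bao = hourly_acid_output(basal)        # 2.0 mEq/hour
mao = hourly_acid_output(stimulated)   # 19.0 mEq/hour
pao = peak_acid_output(stimulated)     # 2 * (5.5 + 6.0) = 23.0 mEq/hour
print(bao, mao, pao)
```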

Gastric acidity assessment involves titration, where the endpoint is determined by observing the change in color of the indicator solution or reaching the desired pH.

In this process, 0.1 N sodium hydroxide, an alkali solution, is incrementally added from a graduated vessel (burette) to a known volume of acid (gastric juice) until the equivalence point of the reaction is achieved. The concentration of acid is then determined based on the concentration and volume of alkali required for neutralizing the specific volume of gastric juice. Acid concentration is expressed in milliequivalents per liter or mmol per liter.

Free acidity signifies the concentration of HCl present in a free, uncombined form in the solution. The volume of alkali added to the gastric juice until Topfer’s reagent (an earlier-added indicator) changes color or when the pH reaches 3.5 is a measure of free acidity. A screening test for free HCl in gastric juice involves observing a red color after adding Topfer’s reagent to an aliquot. The presence of free HCl excludes the diagnosis of pernicious anemia (achlorhydria).

Combined acidity encompasses HCl combined with proteins and mucin, including small amounts of weak acids in gastric juice.

Total acidity is the summation of free and combined acidity. The amount of alkali added to gastric juice until phenolphthalein indicator (previously added to the gastric juice) changes color is indicative of total acidity (Box 1).
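The titration arithmetic described above can be sketched as follows. This is a minimal example under stated assumptions: the function names are hypothetical, the alkali is the 0.1 N sodium hydroxide mentioned in the text, and the acid is treated as monoprotic so that milliequivalents of alkali consumed equal milliequivalents of acid neutralized.

```python
def acid_concentration_meq_per_l(naoh_ml: float, sample_ml: float,
                                 naoh_normality: float = 0.1) -> float:
    """Acid concentration (mEq/L) from the volume of NaOH used at the endpoint."""
    if sample_ml <= 0:
        raise ValueError("sample volume must be positive")
    # Normality in Eq/L equals mEq/mL, so mL x normality gives mEq of alkali.
    meq_neutralized = naoh_ml * naoh_normality
    # Scale from the titrated aliquot (mL) to one liter.
    return meq_neutralized * 1000 / sample_ml


def acid_content_meq(concentration_meq_per_l: float,
                     collected_volume_ml: float) -> float:
    """Acid content (mEq) of a whole 15-minute collection."""
    return concentration_meq_per_l * collected_volume_ml / 1000


# Hypothetical values: a 5 ml aliquot needs 2 ml of 0.1 N NaOH,
# and the 15-minute collection had a volume of 25 ml.
concentration = acid_concentration_meq_per_l(naoh_ml=2.0, sample_ml=5.0)
content = acid_content_meq(concentration, collected_volume_ml=25.0)
print(round(concentration, 2), round(content, 2))
```

With these example figures the concentration works out to about 40 mEq/L and the acid content of the collection to about 1 mEq. Summing the content of four such collections gives the hourly output.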

Interpretation of Results

- Volume: Normal total volume is 20-100 ml (usually < 50 ml). Causes of increased volume of gastric juice are—

- Color: Normal gastric secretion is colorless, with a faintly pungent odor. Fresh blood (due to trauma, or recent bleeding from ulcer or cancer) is red in color. Old hemorrhage produces a brown, coffee-ground like appearance (due to formation of acid hematin). Bile regurgitation produces a yellow or green color.

- pH: Normal pH is 1.5 to 3.5. In pernicious anemia, pH is greater than 7.0 due to absence of HCl.

- Basal acid output:

- Normal: Up to 5 mEq/hour.

- Duodenal ulcer: 5-15 mEq/hour.

- Zollinger-Ellison syndrome: >20 mEq/hour.

- Peak acid output:

- Normal: 1-20 mEq/hour.

- Duodenal ulcer: 20-60 mEq/hour.

- Zollinger-Ellison syndrome: > 60 mEq/hour.

- Achlorhydria: 0 mEq/hour.

- Normal PAO is seen in gastric ulcer and gastric carcinoma. Values up to 60 mEq/hour can occur in some normal individuals and in some patients with Zollinger-Ellison syndrome.

- In pernicious anemia, there is no acid output due to gastric mucosal atrophy. Achlorhydria should be diagnosed only if there is no free HCl even after maximum stimulation.

- Ratio of basal acid output to peak acid output (BAO/PAO):

- Normal: < 0.20 (or < 20%).

- Gastric or duodenal ulcer: 0.20-0.40 (20-40%).

- Duodenal ulcer: 0.40-0.60 (40-60%).

- Zollinger-Ellison syndrome: > 0.60 (> 60%).

- Normal values occur in gastric ulcer or gastric carcinoma.
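The BAO/PAO bands listed above can be expressed as a small lookup. This is a sketch only: the function name is hypothetical, and because the listed ranges share their end points (0.40 and 0.60), the code assumes that 0.40 to 0.60 inclusive falls in the duodenal ulcer band. The result is a descriptive label, not a diagnosis.

```python
from typing import Tuple


def interpret_bao_pao_ratio(bao: float, pao: float) -> Tuple[float, str]:
    """Return the BAO/PAO ratio and the band it falls into."""
    if pao <= 0:
        raise ValueError("PAO must be positive to compute a ratio")
    ratio = bao / pao
    if ratio < 0.20:
        band = "Normal (also seen in gastric ulcer or gastric carcinoma)"
    elif ratio < 0.40:
        band = "Gastric or duodenal ulcer"
    elif ratio <= 0.60:
        # Boundary handling at 0.40 and 0.60 is an assumption of this sketch.
        band = "Duodenal ulcer"
    else:
        band = "Zollinger-Ellison syndrome"
    return ratio, band


# Hypothetical values in mEq/hour.
ratio, band = interpret_bao_pao_ratio(bao=2.0, pao=23.0)
print(f"{ratio:.2f}: {band}")
```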

Alterations in gastric acid output are linked to various conditions, as outlined in Table 1.

Importantly, the values of acid output, while significant, should not be considered diagnostic in isolation. Correlation with clinical, radiological, and endoscopic features is essential for a comprehensive evaluation.

| Increased gastric acid output | Decreased gastric acid output |
|---|---|


Microscopic Examination of Feces

Microscopic examination of feces is a crucial diagnostic tool in identifying various infections and conditions related to the gastrointestinal tract. This process involves studying small samples of fecal material under a microscope to detect the presence of parasites, eggs, larvae, and other microorganisms.

Microscopic examinations done on fecal sample are shown in Flowchart 1.

- Microscopic examination of feces
  - Direct wet mount
    - For eggs/larvae of helminths and trophozoites/cysts of protozoa
    - If negative, concentration technique
      - For eggs/larvae of helminths and trophozoites/cysts of protozoa
  - Special stains
    - (A) Trichrome stain for identification of trophozoites and cysts
    - (B) AFB stain for oocysts of Cryptosporidium, Cyclospora, and Isospora
    - (C) Wright's stain for white blood cells
  - Cellophane technique
    - For eggs of Enterobius vermicularis

Collection of Specimen for Parasites

Collect a small amount of stool (at least 4 ml or 4 cm³) in a clean container with a tight lid, like a tin box, plastic box, glass jar, or waxed cardboard box. Take 20-40 grams of formed stool or 5-6 tablespoons of watery stool. Make sure it's not mixed with urine, water, soil, or menstrual blood. Trophozoites of Entamoeba histolytica degrade quickly, so bring the sample to the lab immediately. Parasites are best seen in warm, fresh stools, so examine them within an hour of collection. If there's a delay, refrigerate the sample. Use a fixative with 10% formalin or polyvinyl alcohol if transporting to another lab.

Getting one negative result for parasites doesn't mean there's no infection. To be thorough, take three samples on separate days, with a 3-day gap.

For accurate results, the patient should avoid oily laxatives, antidiarrheal meds, bismuth, tetracycline antibiotics, or antacids for a week before the stool exam. Also, no barium swallow examination.

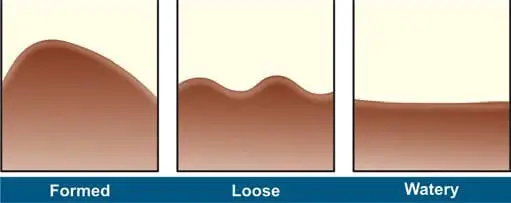

In the lab, check the stool for consistency (watery, loose, soft, or formed), color, odor, and the presence of blood, mucus, adult worms, or tapeworm segments. See Figure 1 for details.

Color/Appearance of Fecal Specimens

- Brown: Normal

- Black: Bleeding in upper gastrointestinal tract (proximal to cecum), Drugs (iron salts, bismuth salts, charcoal)

- Red: Bleeding in large intestine, undigested tomatoes or beets

- Clay-colored (gray-white): Biliary obstruction

- Silvery: Carcinoma of ampulla of Vater

- Watery: Certain strains of Escherichia coli, Rotavirus enteritis, cryptosporidiosis

- Rice water: Cholera

- Unformed with blood and mucus: Amebiasis, inflammatory bowel disease

- Unformed with blood, mucus, and pus: Bacillary dysentery

- Unformed, frothy, foul smelling, which float on water: Steatorrhea.

Preparation of Slides

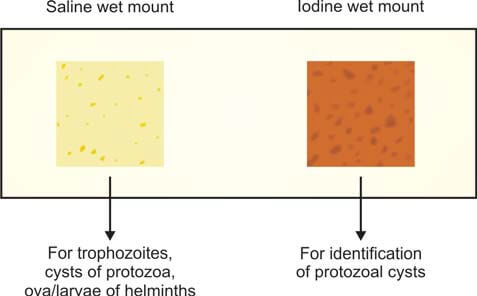

In the lab, we make saline and iodine wet mounts of the sample (see Figure 2).

Here's how it's done: On a glass slide, put a drop of normal saline on one end and a drop of Lugol iodine solution on the other. Take a small bit of feces (about the size of a match-head) and mix it with a drop each of saline and iodine, using a wire loop. Cover each preparation with a cover slip. If there's blood or mucus in the specimen, include that part for examination (trophozoites are often found in mucus). If the stools are liquid, pick the surface portion for examination.

The saline wet mount helps show eggs, larvae of helminths, and trophozoites and cysts of protozoa. It can also detect red and white cells. Iodine stains glycogen and nuclei of cysts. The iodine wet mount is handy for identifying protozoal cysts. Trophozoites stop moving in iodine mounts. If the stool is liquid or diarrheal, you can check it directly without adding saline.

Concentration Procedure

If there are only a few parasites, concentrating the fecal specimen helps. But, it's a trade-off—while it makes it harder to detect amebic trophozoites, it's necessary when wet mount examination doesn't find anything, and there's a suspicion of a parasitic infection. This method is useful for spotting ova, cysts, and larvae of parasites.

There are two main types of concentration techniques:

- Sedimentation techniques: Ova and cysts settle at the bottom, but too much debris can make it tricky to find parasites. An example is the Formol-ethyl acetate sedimentation procedure.

- Floatation techniques: Ova and cysts float on the surface, but not all of them float in this method. Examples include the Saturated salt floatation technique and zinc sulphate concentration technique.

The commonly used sedimentation method is the Formol-ethyl acetate concentration method because:

- It can detect eggs, larvae of almost all helminths, and cysts of protozoa.

- It preserves their shape well.

- It's rapid.

- There's minimal risk of infection for lab workers because formalin kills pathogens.

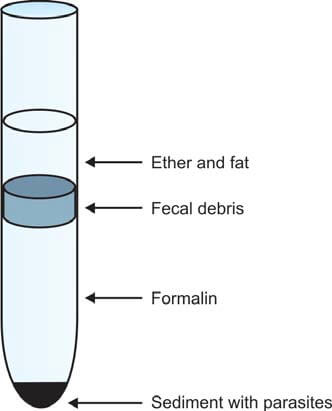

Here's how it works: Make a fecal suspension in 10% formalin (10 ml formalin + 1 gram feces). Pass it through a gauze filter until you get 7 ml of filtered material. Add ethyl acetate (3 ml), then centrifuge the mixture for 1 minute. Eggs, larvae, and cysts settle at the bottom (see Figure 3). Loosen the debris plug and pour off the layers above the deposit (formalin, fecal debris, and ethyl acetate). Place a drop of sediment on each end of a glass slide, stain one drop with iodine, apply cover slips, and examine under the microscope.

Classification of Intestinal Parasites of Humans

Intestinal parasites of humans fall into two main groups: protozoa and metazoa (helminths) (see Flowchart 2).

- Intestinal parasites
  - Protozoa
    - Amebae: Entamoeba histolytica
    - Flagellates: Giardia lamblia
    - Ciliates: Balantidium coli
    - Coccidia: Isospora belli, Cryptosporidium parvum, Cyclospora cayetanensis
    - Microsporidia
  - Helminths
    - Nemathelminthes
      - Ascaris lumbricoides
      - Enterobius vermicularis
      - Ancylostoma duodenale
      - Necator americanus
      - Strongyloides stercoralis
      - Trichuris trichiura
    - Platyhelminthes
      - Trematodes
        - Fasciolopsis buski
      - Cestodes
        - Taenia saginata
        - Taenia solium
        - Hymenolepis nana
        - Diphyllobothrium latum

Summary

Microscopic examination of feces is a crucial diagnostic tool in identifying various infections and conditions related to the gastrointestinal tract. This process involves studying small samples of fecal material under a microscope to detect the presence of parasites, eggs, larvae, and other microorganisms.

Procedure

- Sample Collection: A small amount of stool is collected and prepared for examination. The sample should be free from contamination with urine, water, or soil.

- Wet Mount Preparation: The sample is mixed with normal saline or iodine solution on a glass slide. This helps in observing live organisms and provides information on their motility and characteristics.

- Concentration Techniques: In some cases, concentration methods are used to increase the chances of detecting parasites. Sedimentation and floatation techniques are common, helping to separate parasites from fecal debris.

- Microscopic Observation: The prepared slides are examined under a microscope. The pathologist looks for parasites, cysts, eggs, larvae, and other relevant structures. The examination may also include assessing the color, consistency, and presence of blood or mucus.

Significance

- Parasitic Infections: Microscopic examination helps identify a wide range of parasitic infections, including protozoa and helminths.

- Disease Diagnosis: It aids in diagnosing conditions such as amoebiasis, giardiasis, and various helminth infections.

- Treatment Monitoring: Monitoring the presence of parasites is essential to track the effectiveness of treatment and ensure the elimination of the infection.

In Brief

Microscopic examination of feces is a valuable tool in the hands of pathologists to diagnose and monitor gastrointestinal infections. It allows for a detailed analysis of the fecal sample, aiding in the timely and accurate identification of parasites and other microscopic elements that may indicate an underlying health issue.

CHEMICAL EXAMINATION OF FECES

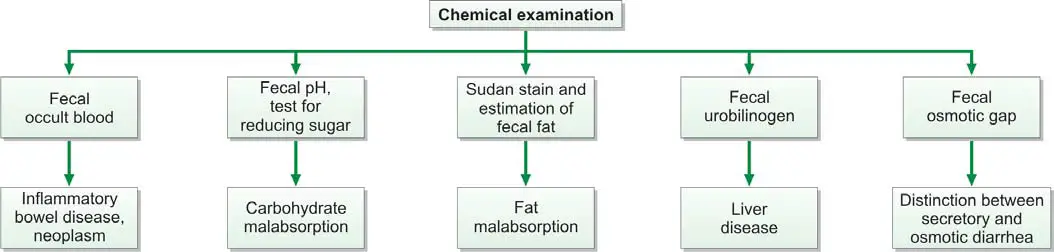

Chemical examination of feces is usually carried out for the following tests (Figure 845.1):

- Occult blood

- Excess fat excretion (malabsorption)

- Urobilinogen

- Reducing sugars

- Fecal osmotic gap

- Fecal pH

Test for Occult Blood in Stools

Blood in feces that is not apparent on gross inspection and can be detected only by chemical tests is called occult blood. Causes of occult blood in stools are:

- Intestinal diseases: hookworms, amebiasis, typhoid fever, ulcerative colitis, intussusception, adenoma, cancer of colon or rectum.

- Gastric and esophageal diseases: peptic ulcer, gastritis, esophageal varices, hiatus hernia.

- Systemic disorders: bleeding diathesis, uremia.

- Long distance runners.

Occult blood test is recommended as a screening procedure for detection of asymptomatic colorectal cancer. Yearly examinations should be carried out after the age of 50 years. If the test is positive, endoscopy and barium enema are indicated.

Tests for detection of occult blood in feces: Many tests are available which differ in their specificity and sensitivity. These tests include tests based on peroxidase-like activity of hemoglobin (benzidine, orthotolidine, aminophenazone, guaiac), immunochemical tests, and radioisotope tests.

Tests Based on Peroxidase-like Activity of Hemoglobin

Principle: Hemoglobin has peroxidase-like activity and releases oxygen from hydrogen peroxide. Oxygen molecule then oxidizes the chemical reagent (benzidine, orthotolidine, aminophenazone, or guaiac) to produce a colored reaction product.

Benzidine and orthotolidine are carcinogenic and are no longer used. Benzidine test is also highly sensitive and false-positive reactions are common. Since bleeding from the lesion may be intermittent, repeated testing may be required.

Causes of False-positive Tests

- Ingestion of peroxidase-containing foods like red meat, fish, poultry, turnips, horseradish, cauliflower, spinach, or cucumber. Diet should be free from peroxidase-containing foods for at least 3 days prior to testing.

- Drugs like aspirin and other anti-inflammatory drugs, which increase blood loss from gastrointestinal tract in normal persons.

Causes of False-negative Tests

- Foods containing large amounts of vitamin C.

- Conversion of all hemoglobin to acid hematin (which has no peroxidase-like activity) during passage through the gastrointestinal tract.

Immunochemical Tests

These tests specifically detect human hemoglobin. Therefore there is no interference from animal hemoglobin or myoglobin (e.g. meat) or peroxidase-containing vegetables in the diet.

The test consists of mixing the sample with latex particles coated with anti-human hemoglobin antibody; if agglutination occurs, the test is positive. This test can detect 0.6 ml of blood per 100 grams of feces.

Radioisotope Test Using 51Cr

In this test, 10 ml of patient’s blood is withdrawn, labeled with 51Cr, and re-infused intravenously. Radioactivity is measured in fecal sample and in simultaneously collected blood specimen. Radioactivity in feces indicates gastrointestinal bleeding. Amount of blood loss can be calculated. Although the test is sensitive, it is not suitable for routine screening.
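The text does not give the calculation itself. A minimal sketch of one common way to estimate the loss is shown below, assuming that the total radioactivity recovered in a day's stool is divided by the radioactivity per ml of the blood sample collected at the same time. The function name, parameter names, and sample values are hypothetical and serve only as illustration.

```python
def fecal_blood_loss_ml_per_day(
    fecal_counts_per_g: float,
    stool_mass_g_per_day: float,
    blood_counts_per_ml: float,
) -> float:
    """Estimate daily gastrointestinal blood loss from 51Cr counts.

    Assumption (not stated in the text): all count rates are measured on the
    same counter and expressed in the same unit, e.g. counts per minute.
    """
    if blood_counts_per_ml <= 0:
        raise ValueError("blood_counts_per_ml must be positive")
    if fecal_counts_per_g < 0 or stool_mass_g_per_day < 0:
        raise ValueError("fecal inputs must be non-negative")
    # Total radioactivity passed in stool over one day.
    total_fecal_counts = fecal_counts_per_g * stool_mass_g_per_day
    # Each ml of labeled blood carries blood_counts_per_ml of radioactivity.
    return total_fecal_counts / blood_counts_per_ml


# Hypothetical values, for illustration only.
loss = fecal_blood_loss_ml_per_day(
    fecal_counts_per_g=50.0,
    stool_mass_g_per_day=200.0,
    blood_counts_per_ml=2000.0,
)
print(f"Estimated blood loss: {loss:.1f} ml/day")
```

Taking the ratio against a simultaneously collected blood sample means the result does not depend on the absolute dose of label given.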

Apt test: This test is done to decide whether blood in the vomitus or in the feces of a neonate represents swallowed maternal blood or is the result of bleeding in the gastrointestinal tract. The test was devised by Dr. Apt and hence the name. The baby swallows blood during delivery or during breastfeeding if nipples are cracked. Apt test is based on the principle that if blood is of neonatal origin it will contain high proportion of hemoglobin F (Hb F) that is resistant to alkali denaturation. On the other hand, maternal blood mostly contains adult hemoglobin or Hb A that is less resistant.

Test for Malabsorption of Fat

Dietary fat is absorbed in the small intestine with the help of bile salts and pancreatic lipase. Fecal fat mainly consists of neutral fats (unsplit fats), fatty acids, and soaps (fatty acid salts). Normally very little fat is excreted in feces (<7 grams/day in adults). Excess excretion of fecal fat indicates malabsorption and is known as steatorrhea. It manifests as bulky, frothy, and foul-smelling stools, which float on the surface of water.

Causes of Malabsorption of Fat

- Deficiency of pancreatic lipase (insufficient lipolysis): chronic pancreatitis, cystic fibrosis.

- Deficiency of bile salts (insufficient emulsification of fat): biliary obstruction, severe liver disease, bile salt deconjugation due to bacterial overgrowth in the small intestine.

- Diseases of small intestine: tropical sprue, celiac disease, Whipple’s disease.

Tests for fecal fat are qualitative (i.e. direct microscopic examination after fat staining), and quantitative (i.e. estimation of fat by gravimetric or titrimetric analysis).

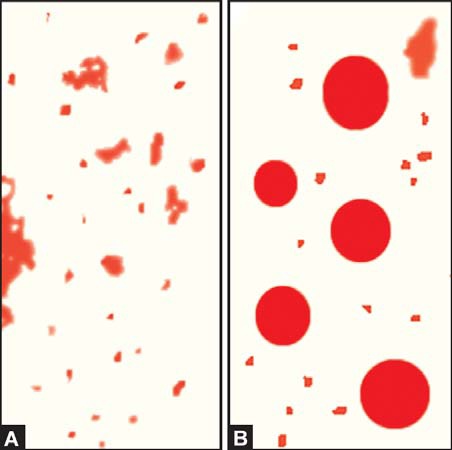

- Microscopic stool examination after staining for fat: A random specimen of stool is collected after putting the patient on a diet of >80 gm fat per day. Stool sample is stained with a fat stain (oil red O, Sudan III, or Sudan IV) and observed under the microscope for fat globules (Figure 845.2). Presence of ≥60 fat droplets/HPF indicates steatorrhea. Ingestion of mineral or castor oil and use of rectal suppositories can cause problems in interpretation.

- Quantitative estimation of fecal fat: The definitive test for diagnosis of fat malabsorption is quantitation of fecal fat. Patient should be on a diet of 70-100 gm of fat per day for 6 days before the test. Feces are collected over 72 hours and stored in a refrigerator during the collection period. Specimen should not be contaminated with urine. Fat quantitation can be done by gravimetric or titrimetric method. In gravimetric method, an accurately weighed sample of feces is emulsified, acidified, and fat is extracted in a solvent; after evaporation of solvent, fat is weighed as a pure compound. Titrimetric analysis is the most widely used method. An accurately weighed stool sample is treated with alcoholic potassium hydroxide to convert fat into soaps. Soaps are then converted to fatty acids by the addition of hydrochloric acid. Fatty acids are extracted in a solvent and the solvent is evaporated. The solution of fat made in neutral alcohol is then titrated against sodium hydroxide. Fatty acids comprise about 80% of fecal fat. Values >7 grams/day are usually abnormal. Values >14 grams/day are specific for diseases causing fat malabsorption.
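The interpretation of a 72-hour collection can be sketched as follows. This is an illustrative sketch only: the function names are hypothetical, the cutoffs (7 and 14 grams/day) are the ones quoted above, and treating exactly 7 grams/day as normal is an assumption, since the text does not say how to handle the boundary.

```python
def daily_fecal_fat_g(total_fat_g: float, collection_hours: float = 72.0) -> float:
    """Convert total fat recovered over the collection period to grams per day."""
    if collection_hours <= 0:
        raise ValueError("collection_hours must be positive")
    if total_fat_g < 0:
        raise ValueError("total_fat_g must be non-negative")
    return total_fat_g * 24.0 / collection_hours


def interpret_fecal_fat(fat_g_per_day: float) -> str:
    """Apply the cutoffs quoted in the text (adult values)."""
    if fat_g_per_day > 14.0:
        return "specific for fat malabsorption"
    if fat_g_per_day > 7.0:
        return "usually abnormal"
    # Assumption: a value of exactly 7 g/day is treated as normal.
    return "within normal limits"


# Hypothetical result: 30 g of fat recovered from a 72-hour collection.
per_day = daily_fecal_fat_g(30.0)
print(per_day, interpret_fecal_fat(per_day))
```

Reporting the result per day rather than per collection keeps it comparable with the reference value even if the collection period differs from 72 hours.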

Test for Urobilinogen in Feces

Fecal urobilinogen is determined by Ehrlich’s aldehyde test (see Article “Test for Detection of Urobilinogen in Urine”). Specimen should be fresh and kept protected from light. Normal amount of urobilinogen excreted in feces is 50-300 mg per day. Increased fecal excretion of urobilinogen is seen in hemolytic anemia. Urobilinogen is decreased in biliary tract obstruction, severe liver disease, oral antibiotic therapy (disturbance of intestinal bacterial flora), and aplastic anemia (low hemoglobin turnover). Stools become pale or clay-colored if urobilinogen is reduced or absent.

Test for Reducing Sugars

Deficiency of the intestinal enzyme lactase is a common cause of malabsorption. Lactase converts lactose (in milk) to glucose and galactose. If lactase is deficient, unabsorbed lactose is converted to lactic acid with production of gas. In infants this leads to diarrhea, vomiting, and failure to thrive. Benedict’s test or Clinitest™ tablet test for reducing sugars is used to test a freshly collected stool sample for lactose. In addition, the oral lactose tolerance test is abnormal in lactase deficiency: after oral lactose, blood glucose fails to rise more than 20 mg/dl above the basal value. A rise in blood glucose indicates that lactose has been hydrolysed and absorbed by the mucosa. The lactose tolerance test has now been replaced by lactose breath hydrogen testing. In lactase deficiency, accumulated lactose in the colon is rapidly fermented to organic acids and gases like hydrogen. Hydrogen is absorbed and then excreted through the lungs into the breath. The amount of hydrogen in breath is then measured; a rise of more than 20 ppm above baseline within 4 hours indicates a positive test.
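The two cutoffs described above can be expressed as a short sketch. The function names, the data layout (pairs of hours and ppm), and the sample readings are hypothetical; only the thresholds (20 mg/dl, 20 ppm, 4 hours) come from the text. Treating a rise of exactly 20 mg/dl as abnormal is one reading of the wording and should be taken as an assumption.

```python
from typing import Iterable, Tuple

GLUCOSE_RISE_CUTOFF_MG_DL = 20.0  # from the text
HYDROGEN_RISE_CUTOFF_PPM = 20.0   # from the text
BREATH_TEST_WINDOW_HOURS = 4.0    # from the text


def lactose_tolerance_abnormal(
    basal_glucose_mg_dl: float, peak_glucose_mg_dl: float
) -> bool:
    """True when blood glucose fails to rise more than 20 mg/dl above basal."""
    rise = peak_glucose_mg_dl - basal_glucose_mg_dl
    return rise <= GLUCOSE_RISE_CUTOFF_MG_DL


def breath_hydrogen_positive(
    baseline_ppm: float, readings: Iterable[Tuple[float, float]]
) -> bool:
    """True when any reading within 4 hours exceeds baseline by more than 20 ppm.

    `readings` holds (hours_after_lactose, hydrogen_ppm) pairs.
    """
    return any(
        hours <= BREATH_TEST_WINDOW_HOURS
        and ppm - baseline_ppm > HYDROGEN_RISE_CUTOFF_PPM
        for hours, ppm in readings
    )


# Hypothetical readings, for illustration only.
samples = [(0.5, 8.0), (1.0, 15.0), (2.0, 41.0), (3.0, 55.0)]
print(breath_hydrogen_positive(baseline_ppm=6.0, readings=samples))
print(lactose_tolerance_abnormal(basal_glucose_mg_dl=85.0, peak_glucose_mg_dl=95.0))
```

Both functions compare against the patient's own baseline rather than an absolute value, which is how the text states the criteria.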

Fecal Osmotic Gap

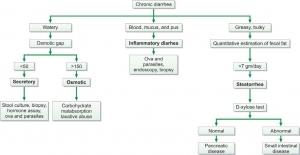

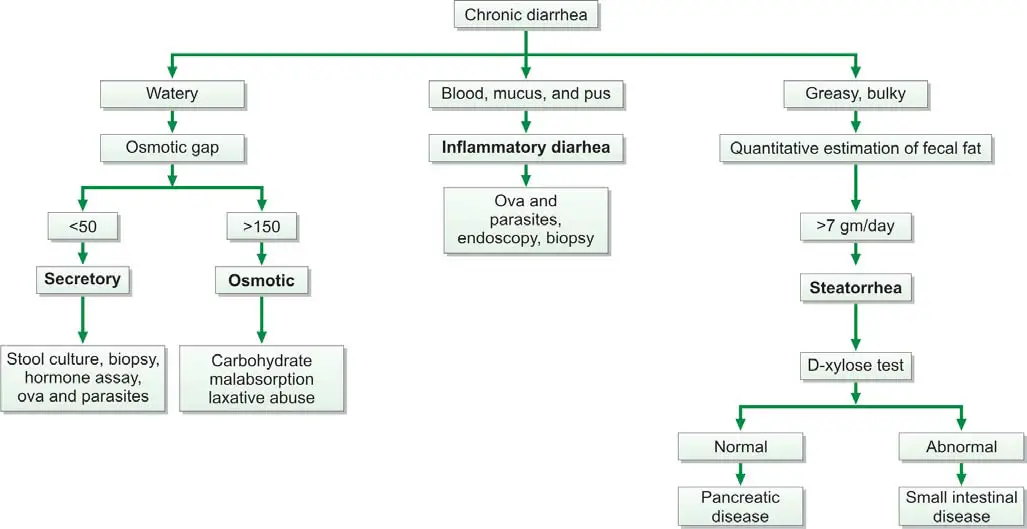

Fecal osmotic gap is calculated from the concentration of electrolytes in stool water by the formula 290 − 2 × ([Na+] + [K+]), where 290 is the assumed plasma osmolality. In osmotic diarrheas, osmotic gap is >150 mOsm/kg, while in secretory diarrhea, it is typically below 50 mOsm/kg. Evaluation of chronic diarrhea is shown in Figure 845.3.
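As a worked illustration of the formula, the sketch below computes the gap and applies the two cutoffs quoted above. The function names and the sample electrolyte values are hypothetical, and labeling intermediate values (50 to 150 mOsm/kg) as "indeterminate" is an assumption, because the text does not say how to interpret them.

```python
ASSUMED_OSMOLALITY = 290.0  # mOsm/kg, the assumed value used in the formula


def fecal_osmotic_gap(stool_na_mmol_l: float, stool_k_mmol_l: float) -> float:
    """Osmotic gap = 290 - 2 * ([Na+] + [K+]), electrolytes measured in stool water."""
    if stool_na_mmol_l < 0 or stool_k_mmol_l < 0:
        raise ValueError("electrolyte concentrations must be non-negative")
    return ASSUMED_OSMOLALITY - 2.0 * (stool_na_mmol_l + stool_k_mmol_l)


def classify_diarrhea(gap_mosm_kg: float) -> str:
    """Apply the cutoffs quoted in the text."""
    if gap_mosm_kg > 150.0:
        return "osmotic"
    if gap_mosm_kg < 50.0:
        return "secretory"
    # Assumption: the text gives no interpretation for values in between.
    return "indeterminate"


# Hypothetical stool electrolyte values (mmol/L), for illustration only.
gap_a = fecal_osmotic_gap(30.0, 30.0)   # 290 - 2 * 60
gap_b = fecal_osmotic_gap(90.0, 40.0)   # 290 - 2 * 130
print(gap_a, classify_diarrhea(gap_a))
print(gap_b, classify_diarrhea(gap_b))
```

The factor of 2 accounts for the anions that accompany sodium and potassium, so a large gap points to unmeasured osmotically active solutes in the stool.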

Fecal pH

Stool pH below 5.6 is characteristic of carbohydrate malabsorption.

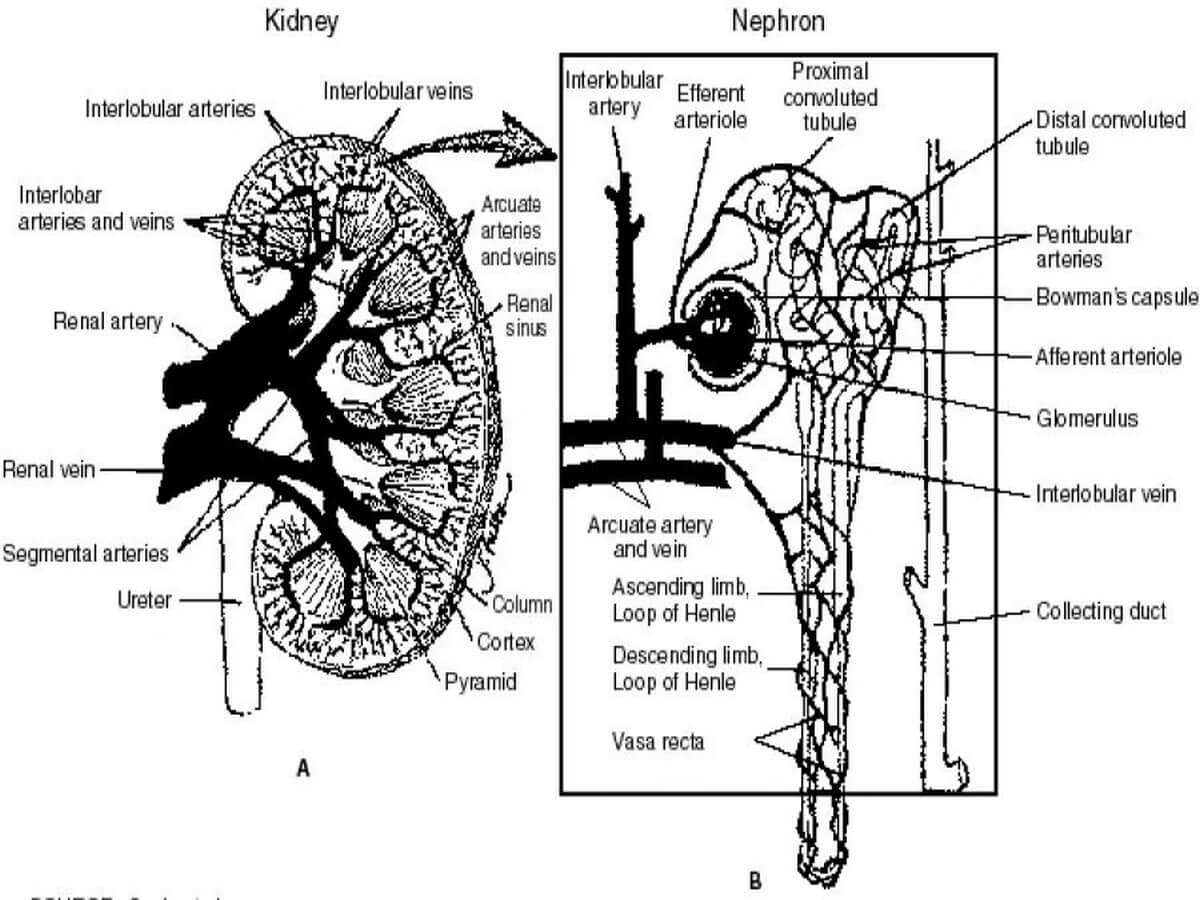

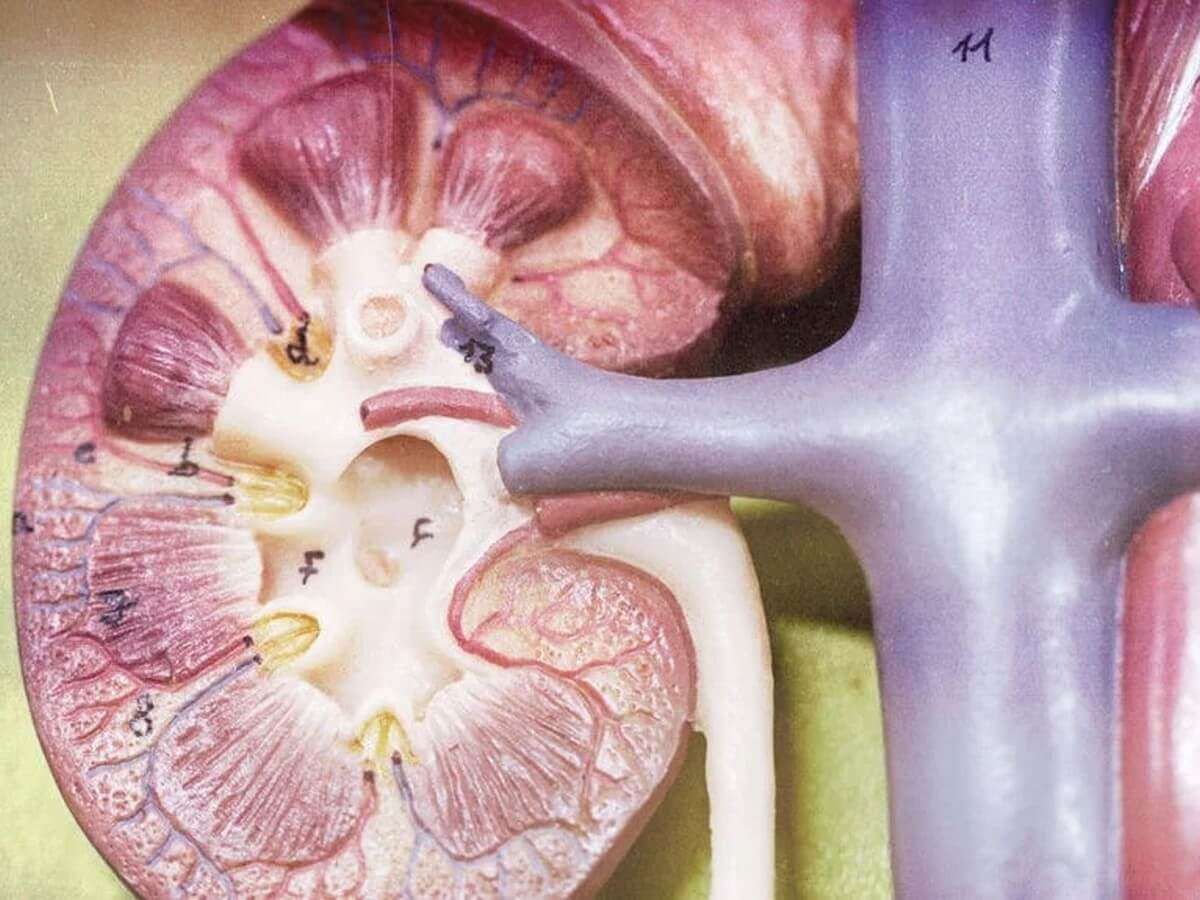

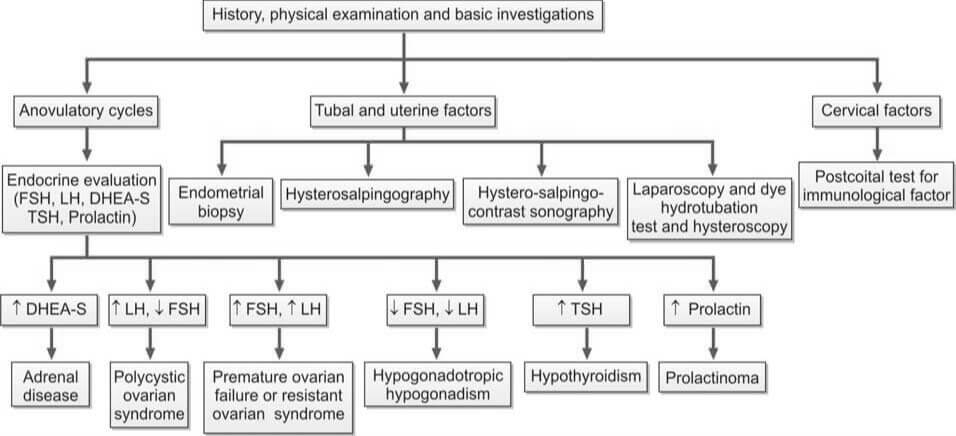

Laboratory Tests to Evaluate Tubular Function

These diagnostic assessments are designed to evaluate the performance of two crucial components of the kidney – the proximal and distal tubules. Proximal tubular function tests, such as Fractional Excretion of Sodium (FENa) and Tubular Reabsorption of Phosphate (TRP), gauge the efficiency of reabsorption in the proximal tubule. On the other hand, tests for distal tubular function, like the Urine Acidification Test, focus on the tubule's ability to maintain the body's acid-base balance. These tests play an important role in diagnosing renal disorders by providing valuable information on the specific functionalities of these intricate renal structures.

Tests to Assess Proximal Tubular Function

The renal tubules play a crucial role in reabsorbing 99% of the glomerular filtrate to retain vital substances such as glucose, amino acids, and water.

Glycosuria

Renal glycosuria is the excretion of glucose in urine despite normal blood glucose levels. It results from a specific tubular defect that impairs glucose reabsorption and is a benign condition. Glycosuria may also occur in Fanconi syndrome.

Generalized aminoaciduria

Proximal renal tubular dysfunction leads to the excretion of multiple amino acids in urine due to defective tubular reabsorption.